Part 3: How to drive alignment and thrive when business, technology, and data converge

A 3-Part Series: Thriving Across the Worlds of Finance, Business, Data, and Technology

Welcome to the Data Score newsletter, your go-to source for insights into the world of data-driven decision-making. Whether you're an insight seeker, a unique data company, a software-as-a-service provider, or an investor, this newsletter is for you. I'm Jason DeRise, a seasoned expert in the field of alternative data insights. As one of the first 10 members of UBS Evidence Lab, I was at the forefront of pioneering new ways to generate actionable insights from data. Before that, I successfully built a sell-side equity research franchise based on proprietary data and non-consensus insights. Through my extensive experience as a purchaser and creator of data, I have gained a unique perspective that allows me to collaborate with end-users to generate meaningful insights.

Bridging the expertise of business, finance, data, and tech is critical but fraught with issues. We dissect this universal challenge and chart a path to thriving outcomes. In part 1, we introduced a multi-part entry in The Data Score newsletter. In part 2, we diagnosed the underlying issues. In this final part of the series, we explore how to break down the silos between data, technology, finance, and business to more easily get to the good part of a data insights practice: actionable, accurate decisions.

What I hope to offer here is a benchmark of the ideal for setting up the best possible outcomes when the worlds of business and finance come together with the worlds of technology and data.

I’ve seen the good, the bad, and the ugly in my experience working across all these worlds. But I want to be super clear on this: I don’t have all the answers. As I said in Part 1, I’m human, so I make mistakes too. I can’t promise that I’ve been perfect at following these ideals in the past. Nor can I promise that in the future there will not be times when communication will break down while I’m involved in the project.

The benchmark I put forward is to stimulate conversation. I’m keen to hear how everyone who reads this newsletter feels. Finance professionals, business leaders, data professionals, and technologists: how do you break down these silos to generate positive outcomes, even in difficult situations?

Part 1: Framing the problem: why silos are not viable anymore

Original silos and problems to be solved are mostly self-contained.

Problem complexity is accelerating.

Part 2: What goes wrong when the worlds collide?

Talking past each other

Smartest person in the room syndrome

Fear of failure

Part 3: How to break down the silos and align around outcomes

Build empathy.

Create proactive rituals.

Top-down culture

Practical solutions to bridge the gap between silos at an organizational level

Finance, business, data, and tech are all on a collision course toward working without silos, but blockers keep the areas of expertise from coming together effectively to create favorable outcomes that are bigger than any one part can achieve on its own. Breaking down the silos will require effort in the soft skills of collaboration and personal interaction.

I’m a huge fan of Wanda Wallace’s work as a career coach, a podcaster, and an author. Her book “You Can’t Know It All” https://www.amazon.com/You-Cant-Know-All-Expertise/dp/006283598X and her podcast “Out of the Comfort Zone” focus on the emotional intelligence skills needed to span expertise across many areas. While her work focuses on advancing to leadership positions, the same skills are needed when working across silos of expertise.

“You're really good at your expertise; you're seen as a super doer, a super executor; you solve any problem, grow everything. But you're not seen as the person who can take the broader role where you don't know all the details. You can't dig deeply. Can't do it yourself. You've got to span domains and trust other people to give you solutions and ideas, and that is where people struggle.” - Wanda Wallace, from her interview on the Corporate Bartender Podcast.

One of the key aspects of her work is the importance of questions in building relationships and trust when working across various areas of expertise besides your own. When the level of expertise is needed across finance, business, data, and technology, it’s just not possible for one silo to take ownership and just get it done.

Psychological leadership1 is more important than any positional authority in getting results across the previously separated silos. I believe there is no such thing as positional authority. Employees need to buy into the vision and the plan to get there in order to really engage and be committed.

The three areas to break down the silos heavily lean into the soft skills and emotional intelligence needed to succeed. While there are some aspects that ultimately come down to individuals stepping up to meet these requirements of successfully collaborating, I believe there are things organizations can do to make it easier and second nature when working across silos of expertise.

Build empathy.

Create proactive rituals.

Top-down culture.

I believe that changing culture requires that behaviors change first.

In the details below, I share my views on how to organizationally change behaviors and processes while setting an example from the top in an effort to make the actions more tangible than simply sharing the ideals.

Build empathy

A critical aspect of generating insight from data is that everyone in the chain can work backward from the desired outcome to the correct solution. This requires everyone to be on the same page, and empathy is critical to facilitating that. It’s important that each world of expertise—data, technology, finance, and business—properly understand the outcomes needed by each group. This requires an underlying cultural shift to understand the motivations and aspirations of each group and the outcomes they are trying to achieve.

In the examples I provide, it’s a mirror request where both sides of the relationship are sharing information and asking questions to make sure they are aligned. Both sides are clients of each other. It’s not a one-way conversation.

Just a few examples; there are many more relationships:

Data scientists and business end users:

A good data scientist sits down with the business users of the tools and applications they are creating to understand the end goals of the analytics. Even better would be to make decisions based on the data and analytics product in parallel with the end user.

More data-driven product companies should regularly “eat their own dog food” in their processes to properly understand how to generate insights from the data and therefore make decisions like their customers and stakeholders would. Walking in their shoes and using the same resources and processes makes it easier to improve their data and insights product.

Joint decision-making is ideal.

A good business or investment decision maker would also sit down with the data scientists, engineers, and data stewards2 to understand the day-to-day workflow behind the data product they are using. What is hard about the process? Where are the pain points? What’s on the product roadmap that’s exciting for the data and tech teams? This will help the business stakeholder prioritize their requests to focus on the highest impact and lowest effort items first.

Data Scouts and investment teams:

A good data scout will hear the request for a specific dataset type from the analysts and portfolio managers but recognize there’s a greater “why” behind the request and work to understand that in order to be more effective in sourcing the most effective data to address the need.

The investment teams should treat the data scout as a client too, sharing the why behind the request and being available to answer questions during the data discovery and vetting process. This can’t be a one-way request without providing the support necessary as well as hearing what the blockers could be.

Data engineer and data operations (yes, even when working across data and tech silos, empathy needs to be built):

A good data engineer will sit down with the data operations team to understand the problems and pain points they face each day and provide technical solutions to free up the team's time for more value-added activities. They will figure out what can be automated in the data pipelines and what tools can be provided for those in the process of maintaining the quality of the data and meeting high governance standards3.

The data ops team should treat the data engineering team with the same level of mutual respect and trust, sharing clear feedback on the outcomes needed but also understanding what the engineers' workflow is, what tools are available, and their limitations. The data ops team needs to be available to support and answer ongoing questions from the data engineers, who will certainly run into blockers at some point while automating the data pipeline.

In the process of sitting down and understanding each team’s jobs to be done, it's important to be open to answering questions and investing in the broader team. The more that a data steward can understand the end goal of the analysis, the more they are able to assess the correct actions to manage data anomalies. The more an investment professional can understand how the data is collected and cleansed, the better they will be at using it to make decisions. The better the data engineer understands the use of the data, the more effectively they can simplify the data pipelines while preserving the valuable parts of the process.

Change culture through changed behaviors, process and training

It’s one thing to say what the ideal empathetic situation should be. It’s another thing to do it. I believe that changing culture requires that behaviors change first. This can be implemented through changes in the workflow. Key steps of the data product design and execution process must include key stakeholders across the silos with the goal of being able to walk in their shoes (at least to the best of each team’s ability as non-experts in the other silos).

Cross-functional teams organized around the project:

When a new project is kicked off, especially when something brand new is being created, each of the stakeholders should come together under one temporary team focused on a well-defined goal for the project.

Please note that the actual organizational reporting lines will remain unchanged. The goal of the project aligns the teams (and could become part of the OKRs4 if you follow that approach to goals and alignment).

The cross-functional team breaks down silos quickly as the tactical product team must work to achieve the outcome of the project.

This is different than taking requirements from a stakeholder, going away and doing work, and then coming back with a data product.

Formal Training sessions:

It’s hard for people to have empathy for other groups if they don’t understand what those groups do on a day-to-day basis or what problems they are solving. Business, data, and tech professionals need to learn enough about the other worlds so they can be effective in their own work in support of the other stakeholders in the project.

Examples of formal training sessions:

Teach-ins on how financial market participants make investment decisions and how data is incorporated into the process to help the data professional understand the business outcomes needed.

One of the most effective experiences for data and technology professionals is having the ability to make investment decisions based on their own data. They will find the approach to making an accurate investment decision, for the right reason, is different than what they imagine.

For example, they would be trained on how to identify critical investment questions, what’s priced into the share price, and then seek areas where they could have a view different from consensus5.

Often, I find data professionals can identify companies performing well and poorly using their data product but are very lost trying to understand what’s priced in as the reference point of comparison for the insight they learned from the data. If the market already prices that information in, regardless of how novel the data product is, there isn’t an opportunity for the price change to reflect new information.

Teach-ins for financial professionals on the latest technology and applications available to them demystify what’s happening behind the scenes and provide them opportunities to try out the capabilities of the data they use in their processes. It sparks creativity in using data and technology while also helping them get comfortable with the fact that, behind the scenes, data products and data science techniques are not black boxes6.

Teach-ins for data engineers on the manual workflows followed by data stewards to cleanse and enrich datasets to better identify pain points. Topics could include how they know when there’s a problem with the data harvest or how they tag and enrich the data with correct entities, symbology7, categories, geographies, etc. It will help build an understanding of the valuable work being done by the data operations team and lead to the development of tools and pipelines that improve the execution of the team.
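To make the “what’s priced in” teach-in a bit more tangible, here is a minimal Python sketch. It assumes consensus growth is a reasonable stand-in for what the market has already priced in, which is a simplification. The function name `classify_signal`, the tolerance, and every number are hypothetical and only for illustration.

```python
def classify_signal(data_growth: float, consensus_growth: float,
                    tolerance: float = 0.01) -> str:
    """Compare growth implied by a data product with consensus expectations.

    Assumption: consensus is used as a proxy for what is priced in.
    """
    gap = data_growth - consensus_growth
    if gap > tolerance:
        return "above consensus"
    if gap < -tolerance:
        return "below consensus"
    return "in line with consensus"


# Hypothetical numbers: the data shows the same strong 12% growth in both cases.
print(classify_signal(0.12, 0.12))  # strong growth, but consensus already expects it
print(classify_signal(0.12, 0.08))  # strong growth that consensus does not yet expect
```

The point of the sketch is that the same 12% reading can be either new information or no information at all, depending entirely on the reference point it is compared against.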

A warning on formal training!

Importantly, time must be set aside for training in the regular planning cycle for it to be effective. Offering training sessions to workers who are currently operating at 110% of their potential is essentially viewed as a personal insult. The employees view the training as an obstacle to getting work done or as one more item they must complete on top of everything else they already do to stay afloat while achieving their goals. When there is space in the deliverables plan for training and it doesn't make the staff work harder to complete their usual deliverables on time, you'll find that most individuals are pleased to have access to training to improve their expertise.

Lunch and learn sessions:

These less formal sessions should be set up to champion big wins by stakeholders. The primary goal is to share a very specific story of how the team generated success and what problems they overcame in the process. Coach the presenters to make the presentation as entertaining as possible (for example, using “the hero’s journey” structure). It’s important to combine the story with free food and beverages, as it’s really a celebration. The entertaining story-telling allows the audience to learn and also builds empathy for the other parts of the team. This has the secondary benefit of creating social proof8 for new use cases of data and technology and creating a bit of FOMO9 for the next potential user of the product.

Examples of lunch and learn topics:

How the data ops team caught a major data error, corrected the issue, and delivered actionable insights to the investment team. I like this topic the best because the data operations team is usually the unsung hero of the story. 99.9% of the time, when everything goes well because of a good process, the feedback is “that’s expected.” But when something goes wrong 0.1% of the time, it becomes a fire drill. Worse, the error could become the new narrative about the team, because it’s natural for the error to become the focus instead of all the quiet successes. The best days for a data operations professional are when a crisis is avoided and no one downstream even knows. The lunch and learn sessions would give the opportunity for the success story to be shared.

How the portfolio management team beat the market with data. What was the investment debate, what was priced in, and how did the data and analytics shape the investment decision? It’s important that the entire organization understands the benefit of their work because it helps motivate the team and also makes it easier to be aligned on future projects.

How the data engineering team was able to automate processes in the pipelines, improve accuracy, and free up time capacity. Perhaps it’s a new application of machine learning or a new approach to unit testing. Sharing success will help others understand what is possible in future projects and spark ideas for collaborating to reduce tech debt10 or find efficiencies to expand coverage beyond current capacity.

What other examples of hero’s journey stories would you offer to share during lunch and learn events?

Quick, public celebrations of achievements:

It’s important to have vocal, public celebrations when business success is achieved leveraging data products, a new product is shipped, new problems are solved, new individual milestones are met, or something fun has happened.

It needs to be something quick and positive, allowing for the success to be acknowledged across the firm.

In Evidence Lab, we would ring a gong when successes were achieved. The team would step up and strike the gong (it was quite loud!). Work would stop for a brief moment as the entire floor would applaud, not even knowing what the success was. Then the success or milestone would be revealed out loud by the team in just a few words. Naturally, those working on a similar problem or having a related interest would follow up to learn more. The breakthroughs shared were both major and minor events. We didn’t have a threshold for what counted as success. But we all knew we wanted to hear that gong ring often.

Create proactive rituals for discovery of blockers

The most important part of the process when data, technology, and business professionals collaborate is at the project scoping stage. The brainstorming stage is always fun, especially with the “yes, and” improv-like approach to getting all the ideas on the table without rejecting any (maybe an adept moderator is able to gently steer the group toward ideas more likely to be fertile grounds for real work). The project scoping stage is when reality sets in about what is and isn’t possible. This is when it’s most common for the silos to talk past each other, turn the process into a battle of wits, and also begin to fear the failure of the project (without speaking about it). Changing culture requires behavior changes, and in the process, creating easy-to-follow steps and rituals in the scoping process can set the project on the right path.

Question bursts

The power of simple questions

In my own experience earlier in my career, I really worked hard to make sure I asked smart questions. I was far too concerned with how I would appear in the eyes of others. Part of that was being a sell-side analyst who was responsible for asking smart questions to uncover information on earnings calls while also being seen as someone who’s bright and should be trusted for investment advice.

At some point in my career, my mindset switched. I reached a level of experience where I stopped caring about looking smart and would enjoy asking the most direct, obvious, dumb question. I think it was the most valuable thing I could do as a leader to break through the noise of jargon and the ambiguity of unstated motivations and show it’s ok to not be the smartest person in the room.

The simplicity of a question that’s positive in tone to seek a greater understanding of the problem or solution is important. It’s important that they are open-ended questions, such as

“What’s going on here?”

“How are we going to do that?”

“Can you help me understand what that means?”

The positive tone of these questions matters a lot in making sure they land constructively.

These give the expert on the topic the space to explain it again without the jargon and get right to the point of what matters most.

A process to make it easier for people to ask questions

However, many people do not have the confidence to ask the simple questions that will unlock everyone’s understanding, so a process and rituals should be put in place to make it easier for the group.

Question bursts can achieve this11. Here’s my approach to this. It can be done live or as a pre-scoping meeting step. Each stakeholder in the project is asked to come up with as many questions as possible about the project, independently and anonymously. If live, set a time limit of 5 minutes. The lists are combined, and the most upvoted questions are addressed (Slido is a great resource for this).

The questions could end up being about highly informed, detailed nuances of the project, or they could be as basic as possible. Importantly, the question lists are created independently. It’s also important that the goal is to generate as many questions as possible under time pressure. This lets the real uncertainties surface. It also removes any fear from the team about asking “dumb questions,” because most people would have the same question but not want to be the one to ask it.
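The combine-and-upvote step can be sketched in a few lines of Python. This is only an illustration of the mechanics under simple assumptions (a tool like Slido would handle this in practice); the function name `rank_questions` and the sample questions are hypothetical.

```python
from collections import Counter


def rank_questions(submissions: list[list[str]], votes: list[str],
                   top_n: int = 3) -> list[tuple[str, int]]:
    """Merge independently written question lists and rank them by upvotes."""
    pool: list[str] = []
    seen: set[str] = set()
    for person_questions in submissions:
        for question in person_questions:
            key = question.strip().lower()
            # Identical questions from different people are merged into one entry.
            if key and key not in seen:
                seen.add(key)
                pool.append(question.strip())
    tally = Counter(vote.strip().lower() for vote in votes)
    # sorted() is stable, so ties keep the order in which questions were submitted.
    ranked = sorted(pool, key=lambda q: -tally[q.lower()])
    return [(q, tally[q.lower()]) for q in ranked[:top_n]]


# Hypothetical lists; no names are attached, so submissions stay anonymous.
submissions = [
    ["What does 'cleansed' mean here?", "Who owns the output?"],
    ["who owns the output?", "How will we trust the results?"],
]
votes = ["Who owns the output?", "who owns the output?",
         "How will we trust the results?"]
print(rank_questions(submissions, votes, top_n=2))
```

Note that the two people who asked who owns the output end up counted as one question, which is the pattern described above: the “dumb question” is often the one most people share.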

After a while, the ritual of the question burst will become second nature and is often not needed once teams are working well. Soon enough, stakeholders will be comfortable asking questions aloud that reveal they don’t understand aspects (or all) of the project because they will have the psychological safety of knowing that everyone’s aligned on achieving the best outcomes and that it’s best to address uncertainty before the project begins.

With the questions out in the open, the scoping work can begin to put together a plan working backward from the end outcomes required, the data and analytic deliverables to support the outcomes needed, the cleansing and enrichment needed, the technologies required, and the data sources as inputs in the process.

After moving from the brainstorming phase to the project scoping phase, question bursts can be brought back if there is any sense of discontinuity between the teams. The question-burst approach will surface any new uncertainties, which could allow the team to return to brainstorming or more precisely scope the solution.

Pre-Retrospectives / Pre-Mortems

Blameless retrospectives are an important final step for any project or feature enhancement in the tech world: the team reviews the work after completion to identify successes, failures, and lessons learned. However, when business, technology, and data professionals are trying to build a culture that allows for alignment between the groups, a pre-retrospective or pre-mortem is also an important step in the process.

What can go wrong?

After the initial scoping stage, a pre-retrospective openly discusses how the project might go wrong. Like the question bursts, the process involves independently brainstorming ideas about how the project will fail. The brainstorming should be a 3- to 5-minute exercise. Once the individual lists are generated, they should be combined, and then the participants should upvote the most concerning ways the project could fail. Once the list is ranked, it’s important to discuss openly what would be done proactively to address the concerns and identify how the group might know early that these negative outcome scenarios are increasing in probability.

This could raise concerns proactively, such as uncertainty about the quality of the data or outputs, the time it will take, the service level agreement between the groups, or even the relevance of the solution to the problem at hand.

Proactively thinking about what will happen downstream, post-data product delivery

A second aspect of the pre-retrospective is walking through the potential outcomes of the analysis and what the end users would do with the data in various scenarios. To do this, it needs to be stated:

What the base-level expectation is for the outcome of the analysis

What the delivered data product solution and specific metrics will be.

As a made-up example of using alternative data for an investment decision:

Baseline: We are expecting to prove a specific consumer media company is generating 10% faster revenue growth than their competitors and better than consensus expects over the next 4 quarters.

Delivered metrics: We will provide 4 metrics: unique web visit growth y/y, daily active app user growth y/y, credit card revenue y/y, and overall revenue estimates y/y.

To be clear, this isn’t about doing work to show the baseline view as a measure of project success. The data insights will be whatever the data shows. So, at this pre-retrospective stage, we should ask questions to test other scenarios:

What would happen if the opposite was shown in the data and the company was the weakest in the delivered metrics and analytics in the latest data?

What would happen if the metrics showed exactly what was expected from consensus but were not better than consensus?

What would happen if the output showed even faster revenue growth for the company than the 10% expectations?

This process catches potential misalignments between the metrics and the outcomes needed.

“Wait, how do I go from unique web visits to a revenue estimate versus consensus?”

Or “I’m not sure that measure is aligned with how the industry operates; can we change the metric to _____?”

The process also gets the end users of the analytics thinking about scenarios and opening them to the possibility of different outcomes.

“I think it's going to show my baseline expectation, but if it didn't, I would change my view”.

It may also lead to more open-ended questions that could fit into the question burst process or a “what can go wrong” brainstorm, like “How will we trust that the outcomes of the analysis are accurate?”

On the other side, it’s possible that this process reveals that the process to this point has been a series of “false yeses” in order to not be confrontational, but the end user is actually not on board with the plan at all. It’s worth slowing down or revisiting steps in the process if this happens.

Some examples of when pre-retrospectives would have been beneficial in my experience (appropriately redacted)

A pre-retrospective would have found that an analyst was not actually on board with the random forest approach to estimating demand. They had asked for a forecast of what would happen as new capacity came online in their sector under different geographic demand and constraints, but what they really wanted was a tool to explore scenarios, not a specific estimate.

A pre-retrospective would have revealed which designed metrics based on web-mined data were critical to the operations and which metrics would have been seen as just “interesting” or “nice to have” (which is the nice way businesspeople say not useful at all and shouldn’t have been created in the first place).

A pre-retrospective would have found out that the business stakeholder hadn’t thought through the inputs they wanted run in the optimization model and would reject scenarios that showed less capacity reduction than they wanted to show. We could have reworked the assumptions up front instead of after delivery and saved the project from the beginning.

The key is that the scoped plan is proactively adjusted to reflect the learnings from the pre-retrospective.

The Analytics Power Hour podcast conversation referenced in Part 2 also addressed this concept:

Val Kroll: What is the business case that we’re trying to prove out? And being able to step through those questions together when you have a little bit more breathing room and even going all the way through the end, like what is the outcome matrix? So if this is their outcome, what actions are we going to be taking? … it’s really making sure that all your efforts are gonna be tied to action and there’s just a lot of goodness there, but they’re, you’re not doing all the education at the end when you’re presenting the results or the analysis. They’re bringing them along that journey. And so then you’re making them aware of some of the, the caveats of this approach or, some loopholes with this data that we’re gonna have to work around.
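One way to picture the “outcome matrix” idea is as a simple check that every scenario discussed in the pre-retrospective has an agreed action before any work starts. The Python sketch below is illustrative only: the scenario labels paraphrase the made-up media company example, and the function name `scenarios_without_action` and the placeholder actions are hypothetical.

```python
# Scenario labels paraphrase the made-up example; the wording is illustrative.
SCENARIOS = [
    "baseline confirmed",
    "opposite of baseline",
    "in line with consensus",
    "stronger than baseline",
]


def scenarios_without_action(outcome_matrix: dict[str, str]) -> list[str]:
    """Return the scenarios that still lack an agreed action."""
    return [s for s in SCENARIOS if not outcome_matrix.get(s, "").strip()]


# Placeholder actions; a real team would write its own.
draft_matrix = {
    "baseline confirmed": "proceed with the current view",
    "opposite of baseline": "revisit the view",
    "in line with consensus": "",
}
print(scenarios_without_action(draft_matrix))
```

Any scenario returned by the check is a conversation the team has not yet had, which is a cheap signal that the scoped plan may need adjusting.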

Culture is set from the top of the organizational chart

Two cultural paradigms for dissolving silos must be driven from the top: psychological safety and “everyone is a client.”

Psychological safety

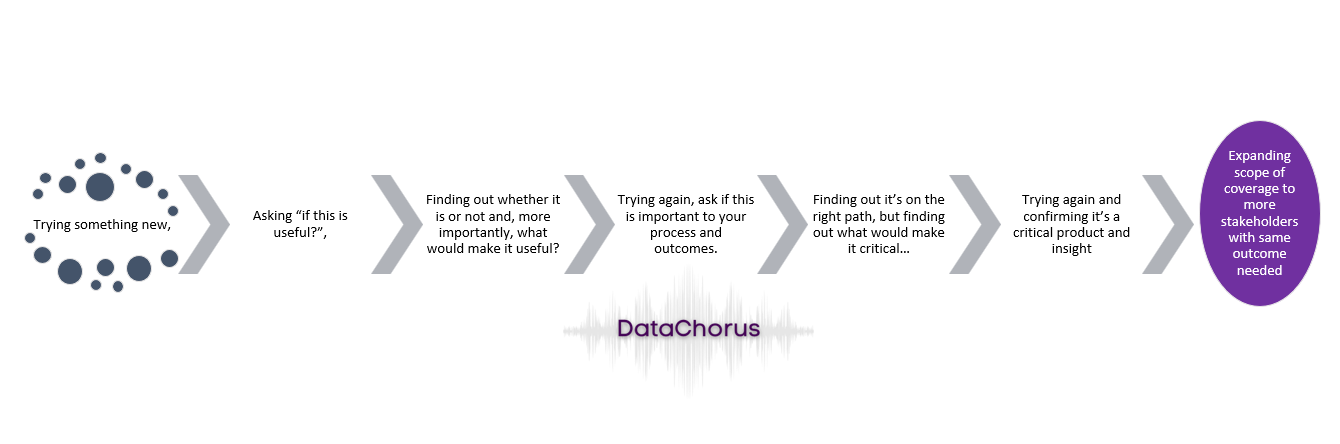

Wrapped around all of the above is the need for management to create a space for risks to be taken within the bounds of proper risk and compliance management. Small tests of “crazy” ideas should be encouraged to find out if they could work or not. When they don’t work, they need to be celebrated as wins. There needs to be support for:

Trying something new.

Asking “Is this useful?”

Finding out whether it is or not and, more importantly, what would make it useful.

Trying again and asking whether it is important to the stakeholder’s process and outcomes.

Finding out it’s on the right path and what would make it critical.

Trying again and confirming it’s a critical product and insight.

Expanding the scope of coverage to more stakeholders who need the same outcome.

There’s something special about that agile process with fully aligned teams that cuts through bureaucracy and politics and gets right to creating something valuable quickly. But it only works if everyone involved is not shamed for failing multiple times or challenged on their use of resources.

That starts at the top of the organization, setting appropriate guidelines and guardrails for low-risk innovation and R&D, and then scaling the products and solutions that have product/market fit12 to a wider audience.

What not to do in an R&D strategy

There are two actions that undo the psychological safety benefit, especially in a financial market context, and should be avoided. Both are at opposite ends of the spectrum but lead to unfavorable outcomes.

Error 1: Allowing the R&D effort to be run aggressively, building new products without review and guardrails, allowing small tests to be skipped, and launching fully functional projects.

Most likely, in this error scenario, the work is done via a waterfall methodology instead of an agile methodology13.

It’s highly unlikely to work because it’s rare for data and tech teams to remain aligned with business and financial decision-makers’ needs when working in isolation over extended periods of time, especially as financial markets evolve rapidly.

Furthermore, with more time spent building instead of iterating, overfitting14 of models becomes more likely because there is less time spent testing small product releases and adapting.

This leads to low success rates and a new fear of failure.

Error 2: Following a tight R&D approval process with tight guardrails that limit the ability to use resources.

The burden of getting an R&D test approved means the team will require a high level of confidence that it will actually work and not be seen as wasting resources. That confidence is false: if an idea were known to work, it would have been done already.

This will lead to a bland R&D process and often unintentionally result in a follower strategy by letting other organizations get ahead and then quickly trying to catch up. In financial markets, by the time the competition is willing to share their innovation and investment success story publicly, the opportunity for investment is already over and the alpha generation15 opportunity is gone. Being early matters a lot in investments.

The R&D team will instead spend more time trying to innovate ways to squeeze more alpha out of the existing data products in production, which gets harder as more time passes due to others in the market catching up and using the same “table stakes” data and analytic ideas to create products.

This leads to low success rates and a continued fear of failure.

“Everyone’s a client.”

A key principle that my cohort of UBS Evidence Lab leadership truly believed in is that “Everyone is a client”. Perhaps this is biased by my experience working in an investment bank for all those years, but in an investment bank, there is no higher priority than the client. The client is the ultimate focus for service and support. So, for me, this is why treating everyone internally as a client was the reiterated philosophy of the UBS Evidence Lab management team I was part of.

Executives should establish and track this "everyone is a client" mindset at all levels across business, data, and technology. Each handoff in the process between functional expertise needs to be a clean handoff.

In a DevOps16 structure of continuous feedback and development, each team’s work requires acceptance by the next team, confirming that the work was implemented according to plan and is not causing further problems. Effectively, everyone owns the product and outcomes and can take actions to succeed or stop the process if something is wrong.

This also means that the data and technology professionals should be seen as clients of the business professionals too. Of course, the business professional is asking for help in achieving external customer outcomes, but in the process, they are bringing ideas and work to the data and tech teams, which makes the data and tech teams clients of the business professional.

This handoff needs real investment from the business professional, who needs to be willing to take feedback and adapt their thinking based on the advice of data and technology professionals, who in turn need to put in the time to understand the business professional’s desired outcomes.

The analogy of a Formula 1 team is appropriate here

In Formula 1, there are two star drivers on each team, but there are also 1,000 plus scientists, engineers, and specialists who make them successful. The goal is winning, which aligns the team. Each person on the team has a job to do, but it only works if they are all aligned around the single outcome of winning.

The drivers follow the guidance from the team and proactively share feedback with the team, with everyone taking ownership of the outcome. They communicate through this feedback loop in a very calm and clear way (usually), despite the intense circumstances of the competition.

And while the fans adore the drivers, the success of the drivers is shared back to the team after every win.

Measure repeat usage, acceptance rates, and relationship quality

KPIs are important to understanding the health of any business. So, data product businesses should be exceptionally good at measuring their own performance (plot twist: they aren’t).

Working backward from the outcomes that data products deliver, the end client’s repeat usage and the impact of that usage are the key measures of the end client’s acceptance, and those measures should ultimately align the teams.
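As a rough illustration of a repeat usage measure, here is a minimal Python sketch. The usage log, the client and product names, and the two-session threshold for counting a client as a repeat user are all hypothetical assumptions made for this example; a real data product business would define these to fit its own clients and products.

```python
from collections import Counter

# Hypothetical usage log: one (client_id, product_id) entry per session.
usage_log = [
    ("client_a", "foot_traffic"),
    ("client_a", "foot_traffic"),
    ("client_b", "foot_traffic"),
    ("client_c", "pricing_tracker"),
    ("client_c", "pricing_tracker"),
    ("client_c", "pricing_tracker"),
    ("client_d", "pricing_tracker"),
]


def repeat_usage_rate(log, min_sessions=2):
    """Share of each product's clients with at least `min_sessions` sessions.

    The threshold is an assumption for illustration, not a standard.
    """
    sessions = Counter(log)  # (client, product) -> number of sessions
    users = Counter()
    repeat_users = Counter()
    for (client, product), count in sessions.items():
        users[product] += 1
        if count >= min_sessions:
            repeat_users[product] += 1
    return {product: repeat_users[product] / users[product] for product in users}


rates = repeat_usage_rate(usage_log)
for product, rate in rates.items():
    print(f"{product}: {rate:.0%} of clients are repeat users")
```

Repeat usage on its own says nothing about the impact of that usage, so a measure like this would likely sit next to a qualitative view of what clients actually did with the product.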

Measure the behaviors in the workflow and the quality of relationships

The workflow from idea through product and feature delivery should also be measured to understand acceptance rates between the functions within the supply chain, from feedback through delivery. Tracking acceptance versus rejection of work by the teams and individuals who depend on work from earlier in the supply chain will help monitor potential breakdowns in the handoffs between teams.

In addition to acceptance, it’s important to try to quantify the soft skills in the relationship with a simple rating system. Successful data products require a team effort across the worlds of business, finance, data, and technology, and no one person is so important that their soft skills can be allowed to suffer. In other words, there’s no room for self-focused individual experts who do not take care of others in the workflow as clients.

Measuring the execution of high emotional intelligence at an individual level, along with the behavioral measurement of the acceptance rate, holds everyone accountable to the standard that everyone is a client.
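To make the idea of acceptance rates and a simple relationship rating more concrete, here is a small Python sketch of a handoff scorecard. The team names, the record layout, and the 1-to-5 rating scale are assumptions made for this example rather than a description of any specific organization’s process.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical handoff records:
# (delivering_team, receiving_team, accepted, relationship_rating on a 1-5 scale)
handoffs = [
    ("business", "data", True, 5),
    ("business", "data", False, 2),
    ("data", "technology", True, 4),
    ("data", "technology", True, 4),
    ("technology", "business", True, 3),
    ("technology", "business", False, 3),
]


def handoff_scorecard(records):
    """Summarize acceptance rate and average rating for each team-to-team handoff."""
    grouped = defaultdict(list)
    for delivering, receiving, accepted, rating in records:
        grouped[(delivering, receiving)].append((accepted, rating))

    scorecard = {}
    for pair, rows in grouped.items():
        scorecard[pair] = {
            # True counts as 1 and False as 0, so the sum is the accepted count.
            "acceptance_rate": sum(accepted for accepted, _ in rows) / len(rows),
            "avg_rating": mean(rating for _, rating in rows),
        }
    return scorecard


scorecard = handoff_scorecard(handoffs)
for (delivering, receiving), stats in scorecard.items():
    print(
        f"{delivering} -> {receiving}: "
        f"{stats['acceptance_rate']:.0%} accepted, "
        f"average rating {stats['avg_rating']:.1f}"
    )
```

A low acceptance rate or a low rating on one handoff is a prompt for a conversation about that relationship, not a verdict on either team.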

Closing Thoughts: One Team, One Dream

As discussed in Part 1, the capability of data and technology to solve complex human-led problems is forcing the worlds of business, finance, data, and technology even closer together. The silos will need to be broken down, which introduces new challenges in collaborating to generate greater outcomes than any individual part could achieve on their own (Part 2).

The team must be built from a diverse set of expertise in which each part complements the others to create something greater than any individual or silo could accomplish on its own. The challenges of worlds colliding can be solved through quality human-to-human interactions fueled by high emotional intelligence.

Saying this is not enough. As discussed in this final entry of the 3-part series, the behaviors of each part of the team can be influenced by investment in development and training and by implementing a process that drives proactive, open communication. Ultimately, this is about culture being set from the top and measuring performance to hold everyone accountable.

The end goal is one team achieving one dream.

These entries in The Data Score are a benchmark I’m offering for comparison with your approach in your organization.

How does this benchmark compare with what’s worked for you?

What other approaches have been successful in systematically breaking down silos?

Links: Part 1 | Part 2 | Part 3

- Jason DeRise, CFA

Psychological leadership: Leading others through emotional intelligence, relationships, and communication.

Data steward: Person responsible for data governance, including quality, availability, and security.

Data Governance: “Data governance is everything you do to ensure data is secure, private, accurate, available, and usable. It includes the actions people must take, the processes they must follow, and the technology that supports them throughout the data life cycle.” - Google Cloud’s definition: https://cloud.google.com/learn/what-is-data-governance#:~:text=Get%20the%20whitepaper-,Data%20governance%20defined,throughout%20the%20data%20life%20cycle.

OKR (Objectives and Key Results): A goal-setting framework focused on transparent, measurable outcomes that align the organization. For more details check out the book “Measure What Matters” https://www.amazon.com/Measure-What-Matters-Google-Foundation/dp/0525536221

“The Consensus” is the average view of the sell-side for a specific financial measure. Typically, it refers to Revenue or Earnings Per Share (EPS), but it can be any financial measure. It is used as a benchmark for what is currently factored into the share price and for assessing if new results or news are better or worse than expected. However, it is important to know that sometimes there’s an unstated buyside consensus that is the better benchmark for expectations.

Black box model: A model whose inner workings are hidden or not understandable to the user.

Symbology: Reference data in the form of financial identification systems (such as stock ticker identification), joined to the underlying data associated with the entities in the dataset.

Social Proof (or informational social influence): “a psychological and social phenomenon wherein people copy the actions of others in choosing how to behave in a given situation. The term was coined by Robert Cialdini in his 1984 book Influence: Science and Practice. Social proof is used in ambiguous social situations where people are unable to determine the appropriate mode of behavior and is driven by the assumption that the surrounding people possess more knowledge about the current situation.” - Wikipedia definition: https://en.wikipedia.org/wiki/Social_proof

FOMO: Fear Of Missing Out

Tech Debt (Technical Debt): the cost of reworking previously implemented code that is no longer suitable for business needs, typically created when applying quick solutions in the development process. The replacement of the initial work with sustainably written code reduces the technical debt, improving the efficiency of the product.

Question Bursts: For more info on the benefits of question bursts, check out: https://mitsloan.mit.edu/ideas-made-to-matter/heres-how-question-bursts-make-better-brainstorms

Product/Market Fit: The ability of a product to meet the needs of customers, generating strong and sustainable demand for the product.

Waterfall versus Agile Methodologies: “Agile project management is an incremental and iterative practice, while waterfall is a linear and sequential project management practice.” - Atlassian definition: https://www.atlassian.com/agile/project-management/project-management-intro

Overfitting: When a model matches the training data very well when back-tested but fails in real-world use cases when the model is applied to new data.

Alpha: A term used in finance to describe an investment strategy's ability to beat the market or generate excess returns. A simple way to think about alpha is that it’s a measure of the outperformance of a portfolio compared to a pre-defined benchmark for performance. Investopedia has a lot more detail https://www.investopedia.com/terms/a/alpha.asp

DevOps: The combination of Development and Operations in a continuous cycle typically used in software development. The goal is for applications to be created quickly and deployed into production on an ongoing basis, where the operations team is involved in the process and provides consistent feedback to the development team to handle new feature requirements and the removal of bugs.