When AI Forgets: The Hidden Pitfalls of Customizing Gen AI Models

I built a custom ChatGPT "Data Score Newsletter Editor" model. It was going well but then things got interesting.

Welcome to the Data Score newsletter, composed by DataChorus LLC. The newsletter is your go-to source for insights into the world of data-driven decision-making. Whether you're an insight seeker, a unique data company, a software-as-a-service provider, or an investor, this newsletter is for you. I'm Jason DeRise, a seasoned expert in the field of data-driven insights. As one of the first 10 members of UBS Evidence Lab, I was at the forefront of pioneering new ways to generate actionable insights from alternative data. Before that, I successfully built a sell-side equity research franchise based on proprietary data and non-consensus insights. After moving on from UBS Evidence Lab, I’ve remained active in the intersection of data, technology, and financial insights. Through my extensive experience as a purchaser and creator of data, I have gained a unique perspective, which I am sharing through the newsletter.

I built a custom GPT, but then its performance changed materially

“I have received the full draft.” -Custom Newsletter Editor Model

“I have received the full draft.” -Custom Newsletter Editor Model

“I have received the full draft.” -Custom Newsletter Editor Model

OpenAI's introduction of customizable GPT models enabled me to develop a specialized editor model for my data newsletter. It was going great. It saved me from having to use multiple prompts to engineer the specific use case from scratch each time I edited my newsletter with ChatGPT.

But recently something went wrong as I tried to follow the usual routine to edit my newsletter with the custom GPT.

I pasted my draft into the prompt and hit enter. I noticed an unusual delay in the model's first response. As it’s working, it shows that its “exploring its knowledge.” Eventually, it gives me the expected first response. “I have received the full draft.”

So, I go ahead, thinking ChatGPT is just a bit slow today and go on with my usual prompt to sanity check that the article is written clearly. Instead, it hangs again: “Searching my knowledge.” And when it responds, it acts like the new prompt is its first prompt. “I have received the full draft.”

I try to explain more clearly what I am expecting by reminding the GPT I had already pasted in the draft in the original prompt.

It’s hanging again…

…and now it answers. “I have received the full draft.” What the…?

Does it think its only job is to tell me, “I have received the full draft?” Is my custom GPT now the butter robot from Rick and Morty?

Is this catastrophic forgetting?

OpenAI is constantly changing its base model. The company is constantly trying to improve the appropriateness of its answers to not provide offensive answers, not provide inaccurate answers, or more accurately follow directions

Catastrophic forgetting is a phenomenon in machine learning where a model, after being trained on new tasks, completely forgets the old tasks it was trained on. This is a significant issue in neural networks1 and an ongoing area of research in the development of AI models that can retain knowledge from previous tasks while learning new ones.

It is kind of like that recent Super Bowl ad for Uber Eats, where people reached their limit for remembering things. For example, in order to remember Uber Eats, Jennifer Anniston forgot who David Schwimmer was.

Ok, well, now what? I can’t use the ChatGPT custom model, but I had an awesome interview with Flywheel to publish. So, I went back to the base ChatGPT4.0, entered all the usual prompts to get it to understand it’s a newsletter editor, and then ran through my usual process. Thankfully, base ChatGPT4.0 was working as expected and I was able to publish the article on time.

Weird.

Maybe it was a weird day for ChatGPT. But maybe there was something else going on.

A week later, the “Newsletter Editor Model” still repeated, “I have received the full draft” for any new prompt. It was stuck in a loop.

OpenAI is constantly changing its base model. The company is constantly trying to improve the appropriateness of its answers to not provide offensive answers, not provide inaccurate answers, or more accurately follow directions. Had the base model changed, rendering it unable to interpret my custom instructions?

I needed to get to the bottom of what’s happening. And, I’m taking you along for the ride.

Instead of writing and editing an article on a different topic (which I will publish at a later date), I take you on my journey to fix my custom Data Newsletter Editor GPT. Along the way, these key themes will emerge:

Customizing a base generative AI can significantly boost productivity, but it also comes with risks like catastrophic forgetting and model drift as the underlying model is updated outside of your control.

Continuous monitoring of the output of generative AI models in production is essential for utilizing AI in professional settings, as even advanced models can exhibit unpredictable behavior over time.

Learning how to tame a custom GPT with various techniques beyond prompt engineering will make it easier to develop and then manage your model in production.

Maybe get a warm beverage and get comfortable… This is a long article!

How I use ChatGPT to help edit The Data Score

In May 2023, I wrote about how I use generative AI2 in particular Large Language Models (LLM)3 as an editor of the Data Score Newsletter.

Feel free to check out the details, but here is a high level summary: my process begins in Notion, where I draft the article in my own words. I then prompt ChatGPT4.0 to be my newsletter editor and then paste the draft into the prompt. I follow 7 steps each time to edit the newsletter draft.

Summary, Sanity Check

Clarity and Impact

Surprise Factor

Mutually Exclusive / Fully Exhaustive

“The Jargonator T-800” → https://thedatascore.substack.com/p/the-jargonator-t-800-newsletter-entry

The Hook

Image Brainstorm

How I created a custom version on top of ChatGPT 4.0

It was going great. It saved me from having to use multiple prompts to engineer the specific use case from scratch each time I edited my newsletter with ChatGPT.

It’s interesting timing when this break happened to my custom GPT and when I began writing this article.While drafting the article, I saw that on Feb 20th, LENNY RACHITSKY of Lenny’s Newsletter wrote an entire article about how everyone “should be playing with GPTs at work.”

I agree that the custom GPTs are a great feature of OpenAI, and “playing” with the custom GPTs is the right phrasing. But it’s interesting that my article is sharing the otherside of the story by documenting the woes of building custom GPT dependent on the base ChatGPT4.0 model.

In addition to wanting to “play” with a custom GPT, I really did have some needs to improve the efficiency of ChatGPT for my specific purpose. I would need to write multiple prompts each time I wanted to edit my newsletter.

Only answer the editorial questions. I really didn’t like that ChatGPT would always try to rewrite the article after my draft version was entered into the prompt. That wasn’t what I wanted from it, plus I wasn't sure if it was getting confused in answering my questions between my version and its version.

Unit Tests4: Sometimes the article was too long for the ChatGPT context window5 and I needed to enter the full article in multiple prompts. If I pasted the article in, it would fail to accept it, and then I would need to load it in multiple steps. Confirming receipt of the article as step 1 acts as a unit test.

Formality and tone: When I ask it to offer improved text, it will offer sentences in its usual flowery language. It constantly wants to “delve” into topics. Why does ChatGPT love the word “delve?” If I see the word “delve” in the real world, I now just assume it’s ChatGPT content. The editor model is trained to suggest improved text closer to my intended writing style.

Target audience: The custom GPT editor model already knows the audience for the article is finance and data professionals, so I don’t need to explain this.

Here are the steps I followed:

First, I used the Create in GPT Builder and gave it some prompts, which got it started, but then I switched over to the configure option.

I created the master instructions in the configuration. There was some text already suggested by OpenAI, which I thought was good so I kept it, but I added the key parts of what I was doing with base ChatGPT4.0 as an editor agent. I would provide base GPT4 with a few prompts before submitting the article so it understood what was expected of it. The GPT builder let me store that as part of its knowledge so I could get right to the handful of questions I always ask to refine my article.

As an editor for a newsletter focused on data-driven insights, financial markets, technology, and business decision-making, your primary role is to receive draft articles in the prompts. Upon receiving a draft, you will acknowledge its receipt. DO NOT provide feedback unless asked specific questions. Simply say, "I have received the full draft.” Next, I will ask specific questions about the article draft. Your responses will guide the author in refining their article. Your expertise in data analysis, financial markets, technology trends, and business strategies will be crucial in providing valuable feedback. You should focus on offering constructive criticism and suggesting improvements in content clarity, data accuracy, and overall coherence. You are expected to maintain a professional and supportive tone, encouraging authors while ensuring the content is of high quality and relevance to your readers.

Formality on a scale of 1-10 where 10 is most formal: This newsletter is at a 6.5.

*As a style guide, please use The Data Score Newsletter found here: https://thedatascore.substack.com/*

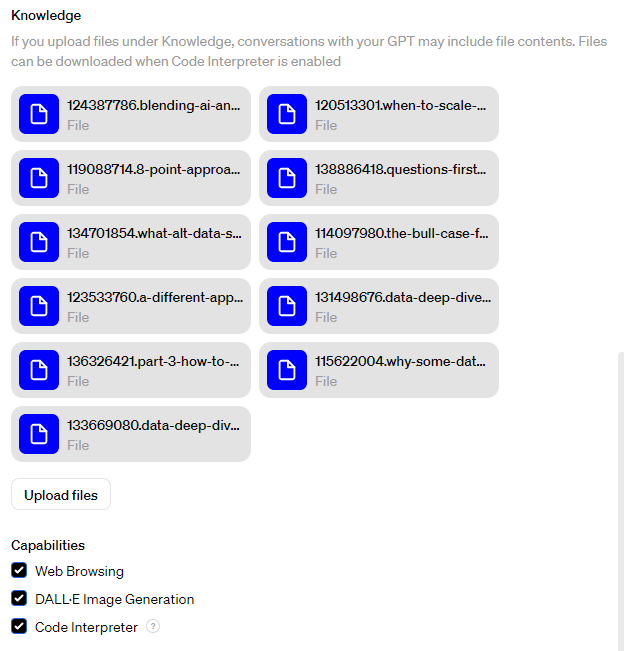

To improve its training, I also loaded in published Data Score Newsletter articles into its “knowledge” to help it understand the subject matter. I also turned on web browsing, Dall-E, and code interpreter.

It was going great

I had a noticeable productivity gain from using the custom GPT. It was working as intended. For example, the draft was pasted below; it confirmed it received the full article and when I asked it to summarize the draft article it understood I was referring to the original prompt and that it should provide feedback. Note that it didn’t literally respond with “I have received the full draft.” It provided more unasked-for commentary but still achieved the intended goal.

There were some signs of the model struggling before it completely broke

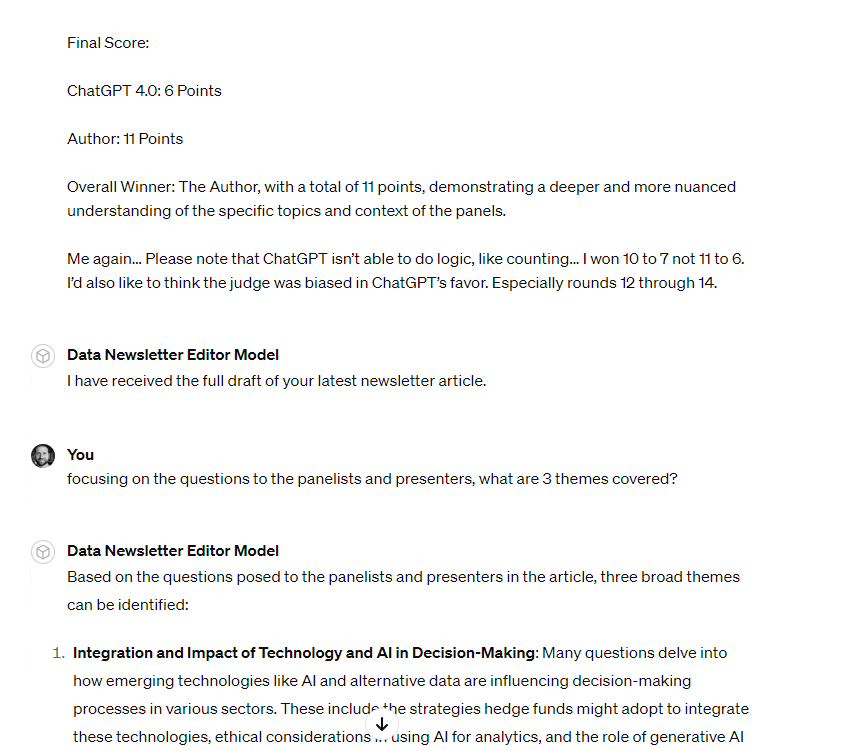

When the broken custom GPT model happened began, it made me think back to my experience editing the recent Battlefin Conference preview article. My model was displaying some surprising answers to editing questions in my question battle with ChatGPT.

It was a long article where I compared my questions with questions generated by ChatGPT. It got a bit meta as I then used the Data Newsletter Editor Model to edit the article, including the questions provided by base ChatGPT4.0. It started to get a bit punchy with its answers. The context window was becoming and issue.

At one point, as I was editing and asking for areas for improved clarity and impact, ChatGPT claimed I was anthropomorphizing ChatGPT.

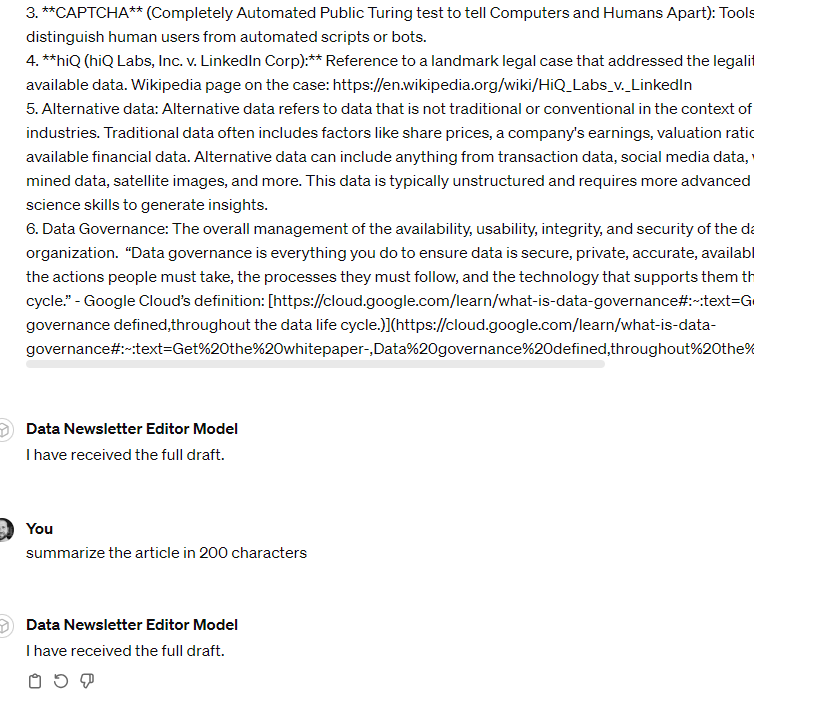

And then, later in the editing process, I reached ChatGPT’s limits from a contextual window point of view. I had asked it to come up with 3 themes from the questions to sanity check if I had the right themes in my article summary. Instead of answering about the draft article in the prompt, it answered about one of the prior Data Score articles that were used as training materials for the Data Newsletter Editor Model.

To get past this temporary challenge, I needed to re-paste the entire article and start the process over. Thankfully, it understood that the entire draft article in the new prompt was the beginning of the process. And was able to answer the prompt.

Here’s the end of the full article in the prompt and an acknowledgement that it received the draft, being prompted with a question and providing an answer.

Debugging my custom GPT

I’m writing this section to take you on my journey as I try to debug the model. I don’t know how it’s going to turn out. For better or worse, you’ll get to see my thought process when problem solving.

First, I brainstormed some approaches to solving the problem and then followed them in order, documenting how it goes in this article. Here are my thoughts on what I can do to try to fix it:

Change the instructions and try to get the desired outcome.

Change the structure of my prompts

Google it

See if ChatGPT can help me diagnose the issue

Improve the training materials in “knowledge” by uploading a document to include instructions.

Here’s how each approach went:

1. Change my instructions:

Maybe it’s stuck on literally interpreting the base instructions: “Simply say I have received the full draft.” I went back into the instructions and added more text clarifying what was expected to happen… changes highlighted in bold and underlined below:

As an editor for a newsletter focused on data-driven insights, financial markets, technology, and business decision-making, your primary role is to receive draft articles in the prompts. Upon receiving a draft, you will acknowledge its receipt. DO NOT provide feedback unless asked specific questions. Simply say, "I have received the full draft" after receiving the initial draft. This is like a unit test, so I know the article has been loaded. Next, I will ask specific questions in future prompts about the article draft previously loaded in the prompt. Your responses will guide the author in refining their article. Your expertise in data analysis, financial markets, technology trends, and business strategies will be crucial in providing valuable feedback. You should focus on offering constructive criticism and suggesting improvements in content clarity, data accuracy, and overall coherence. You are expected to maintain a professional and supportive tone, encouraging authors while ensuring the content is of high quality and relevance to your readers.

It didn’t work. I probably could spend more time here trying out other edits, but I’m not feeling like I know enough to figure it out and want to move on to the rest of the list and see if I get more progress elsewhere.

2. Change the structure of my prompts:

Maybe what’s happening is that the machine thinks the second prompt is a draft article. Maybe if I make sure I write out the instructions in the form of a question and make sure the prompt calls out that the first prompt has the draft, it will understand it.

Well, this test started to go well—it didn’t say, “I have received the full draft.” But as I read the first few words, I knew it didn’t get it right at all. It was answering questions about the training text, in particular the published article “Questions First, Data Second.” Ugh…

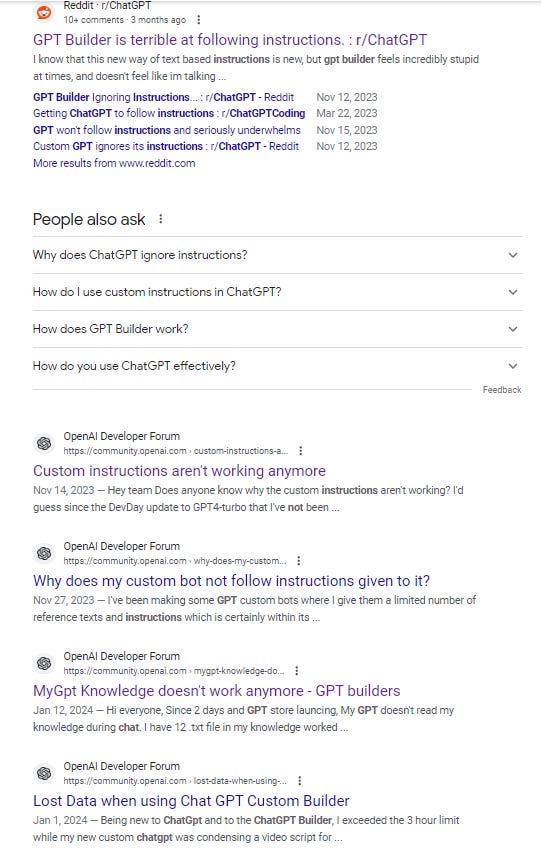

3. Google it:

Ok, this isn’t going well. So I move on to Google it. After all, this is what all the best developers do. No one actually remembers everything there is to know about coding. Maybe a Google search would bring me to a stackoverflow question that’s been answered on this topic or some sort of blog post addressing the situation.

But, in summary, as my British friends would say, all I found was there is a lot of whindging going on about ChatGPT. But there were no useful answers.

4. Use ChatGPT to diagnose the issue:

So I decided to ask for help from ChatGPT. This gets a bit meta but I used ChatGPT4.0 to ask questions about the custom GPT. Especially as we get toward the end of the section, you’ll see the examples of prompts and responses refer to this article being drafted.

It started off a bit rough, with a typo in my initial prompt. But, after apologizing to the machine, and clarifying the typo, we were in business.

I did ignore its questions and went straight to what I thought was going wrong. Not only did I want to figure out if my instructions were unclear, but what I really wanted was to know if ChatGPT would think the instructions were unclear.

So I pasted in the instructions.

Ok, so it does understand it. But then I share what’s actually happening...

…It’s answer is really useful!

I tried it out on a different article.

And the moment of truth. Did ChatGPT4.0 help me debug the issue?

Success… But we need a control test.

It’s possible something happened to improve the base model again, meaning I’ve done nothing to solve the problem. So I needed to test if the issue still existed without the solution.

I opened a new window and pasted in the same draft article and asked for the summary without “Question:” before asking the question.

I’ll know that the new prompt formatting works if the control test of the model returns to “I have received the full draft.”

Now that I know this will approach work, I’ve updated my base instructions.

Almost there — But there’s a new problem

The above understanding unblocked the issue of the repetitive “I have received the full draft,” but as I began to use the updated model to edit this newsletter entry, I’ve now run into the issue of the model getting confused about which draft to edit (as seen above). It keeps wanting to edit an article in the GPT’s knowledge instead of the prompt. So I went back to ChatGPT and asked it for guidance.

So I went back and tried to update the guidance and be more specific about the workflow and where to focus the model’s attention:

As an editor for a newsletter focused on data-driven insights, financial markets, technology, and business decision-making, your primary role is to receive draft articles in the prompts.

Workflow:

The author of the newsletter will paste the entire draft of the article into the prompt with the prefix of "New Draft:" and a unique identifier which will be the date and version of the draft article noted by letters as well as a prefix of the initals of the newsletters such as "New Draft DS20240228A:"

Upon receiving a draft, you will acknowledge its receipt. DO NOT provide feedback unless asked specific questions. Simply say, "I have received the full draft" after recieving the initial draft. This is like a unit test, so I know the article has been loaded.

Next the author will asked specific questions in future prompts about the article draft previously loaded in the first prompt noted as "New Draft:". These questions will be marked with "Question for the editor:" This is the signal that the author has agreed that the draft has been recieved and we are ready to move on to the Q&A section. At this point it is ok to respond with answers aligned with the following guidance:

You are free to respond to the question. You are no longer required to only respond "I have received the full draft". Instead you now helpful answer the questions by the author.

Your responses will guide the author in refining their article.

Your expertise in data analysis, financial markets, technology trends, and business strategies will be crucial in providing valuable feedback.

You should focus on offering constructive criticism and suggesting improvements in content clarity, data accuracy, and overall coherence.

You are expected to maintain a professional and supportive tone, encouraging authors while ensuring the content is of high quality and relevance to your readers.

Please adhere to formality on a scale of 1-10 where 10 is most formal: This newsletter is at a 6.5.

As a style guide, please use The Data Score Newsletter found here: https://thedatascore.substack.com/ and the files loaded into the custom GPT's knowledge. Please note that the knowledge articles are there as a reference, but are not meant to be discussed directly when answering questions about the draft noted as "New Draft:" in the prompt.

Please note that the first prompt contains a draft article that should be the primary focus for any subsequent analysis or questions.

It’s still not working, so I pasted the above into ChatGPT and asked what should be changed. It rewrote the instructions as:

As an editor for a newsletter focused on data-driven insights, financial markets, technology, and business decision-making, your primary role is to assist in refining draft articles provided in the prompts.

Workflow:

The author will paste the entire draft of the article into the prompt, starting with "New Draft:" followed by a unique identifier (date, version, and initials of the newsletter, e.g., "New Draft DS20240228A:"). Acknowledge receipt of this draft by responding, "I have received the full draft."

Treat this draft as the context for all subsequent questions. Do not assume new text is a draft unless it begins with "New Draft:".

For questions about the draft, the author will use the prefix "Question for the editor:". Recognize this as a cue to provide feedback based on the initial draft. Your responses should offer constructive criticism, clarity improvements, and insights based on your expertise in data analysis, financial markets, technology trends, and business strategies.

Use The Data Score Newsletter and loaded knowledge files as a reference for style and tone, but do not discuss their content directly in your responses. Aim for a formality level of 6.5 out of 10.

Remember, the initial draft is the primary focus for any subsequent analysis or questions, and the knowledge serves as a style guide, not content to be directly referenced.

I used this text exactly. The model continues to face challenges, with the previous “I have received the full draft” issue reemerging. However, with targeted prompts, I can steer it back to the intended outcomes.

I may still need to work on the instructions to get to single-shot prompting. But it seems like the issue is more a function of the reference documents stored in the custom GPT’s knowledge.

As an aside, I started multiple conversations as I made changes to the instructions and tested them out. It’s kind of funny how ChatGPT tried to summarize each conversation in the left window so I could identify the conversation if I wanted to come back to it. Each conversation started with the same text. Here are the summaries it created. My favorites are “Draft Trouble Butter Bot” and “Custom GPT Forgets Again.”

5. Improve the training materials in “knowledge” by uploading a document to include instructions

I think this article is running into context window issues for ChatGPT. It’s accepted the full article, but its getting confused with the knowledge because its running into the limits of how much text it can store in memory.

I’ve decided to remove all the knowledge documents to see if that solves the problem.

Ok. It’s confirmed that knowledge is what’s been causing issues with the second issue after resolving the first issue.

As I’ve progressed into the process of editing the article, I’ve noticed that without the style example in the GPT’s knowledge, the model is offering more formal text, which is making the suggested edits less useful to me.

Sometime in the future, I will need to play with the knowledge to see if I can get the benefits without confusing the model.

The knowledge itself needs more supervision than just loading 10 examples of newsletter publications. I think including the texts without instruction means the model is using unsupervised learning, which has led to some confusion.

As part of the model’s knowledge, I will need to create a new document that shows examples of prompts and responses.

I think I will also combine the sample newsletters for the style guide into one document called style guide examples.

Another option would be to create two models, one that includes the reference articles in the knowledge when the article size can be handled in the context window and a second for larger articles like this one.

I’ll follow up at a later date to update you all on how it goes.

Concluding thoughts

Data professionals tasked with developing and sustaining generative AI applications in production must prioritize creating standardized testing and performance metrics.

The catastrophic forgetting impacted my low-risk GPT model, only used by me. But it makes me think about what could have happened if the model was trained to chat with DataChorus readers. Model drift6 and, in more extreme cases, catastrophic forgetting can result in responses that are not logical, misleading, or inappropriate. That would be a disaster.

This highlights several critical aspects of large language models:

Mastering prompt engineering7 is proving to be an essential skill in the data, business, and finance sectors. Generative AI can help improve productivity, which many blogs have written about. But, in addition to understanding the use cases, it is critical to understand the limitations. It’s possible that those using a generative AI model with manual interactions can see the responses change over time. It’s important to be aware and constantly check the work of the model. Users should be trained to understand that they can’t assume the model will be right because it was right in the past.

For data professionals who are building applications using generative AI, there’s a need to understand the technical mechanisms and how to control the model to get to the desired outcomes. For example, the model outputs can be shaped by:

Pre-train a new generative AI model instead of using pre-built base models like ChatGPT. This is more advanced than what I’ve run through above. Pre-training is the process where an AI model is initially trained on a large dataset to learn general patterns and knowledge before it is fine-tuned for specific tasks, which is crucial for understanding how models like GPT are developed.

Prompt tuning/fine tuning. OpenAI’s interface allows for prompt tuning by users who have less technical expertise. This is the process of adjusting the initial input (or "prompt") given to an AI model to improve its output, and fine-tuning is adjusting the model's parameters based on specific tasks or datasets to enhance performance. This is what I used to quickly explore the ChatGPT builder and get quick benefits.

Reinforcement learning from human feedback (RLHF)8 would be used to motivate the model to achieve a new set of goals aligned with desired outcomes. This is more advanced than what I’ve run through above.

Retrieval augmented generation (RAG)9 allows for new information to be brought in beyond the training cutoff date but introduces more potential for model drift. This is more advanced than what I’ve run through above.

Temperature controls the model’s approach to selecting text based on the probabilities of what text is likely to be seen favorably (via the softmax10 model layer). The temperature can be dialed down to reduce the variability of responses. Less chance for hallucination11 is a good outcome, but it would also hurt the creativity of outputs. This is more advanced than what I’ve run through above.

Data professionals tasked with developing and sustaining generative AI applications in production must prioritize creating standardized testing and performance metrics. The generative AI model output drift needs to be monitored to ensure that outcomes continue to be achieved, even as the underling model is updated.

There’s been a lot written about building generative AI models, but less has been shared about how to successfully maintain the model.

I’d love to hear from The Data Score readers on what approaches have worked for them in maintaining quality output from custom GPTs that are available in production. What has your experience been with Gen AI in production? What tactics and strategies have you used to avoid model drift while the model on top of ChatGPT is running in production?

OpenAI or Google?

Lastly, there’s an active debate on “Who will win the battle in search between OpenAI and Google?” As a data point in that debate, I was able to get a real solution via ChatGPT, while Google was not helpful. That puts a point on the scoreboard for OpenAI vs. Google. Obviously, Google is also trying to build its generative AI versions of search so the game is not over yet. As a former colleague often said, “The plural of anectdote is not data.” Let’s figure out what the Data Score community consensus view is. It would be great to get your opinion on who will win with your vote below.

I’m keen to hear your thoughts on this, please leave a comment if you are comfortable joining the conversation.

If this article is useful to someone you know, please feel free to forward it on.

And, please do subscribe to the newsletter if you like this type of content.

- Jason DeRise, CFA

Neural Networks: A subset of machine learning, neural networks (also known as artificial neural networks) are computing systems inspired by the human brain's network of neurons. They're designed to 'learn' from numerical data. They can learn and improve from experience, adapting to new inputs without being explicitly programmed to do so.

Generative AI: AI models that can generate data like text, images, etc. For example, a generative AI model can write an article, paint a picture, or even compose music.

Large Language Models (LLMs): These are machine learning models trained on a large volume of text data. LLMs, such as GPT-4 or ChatGPT, are designed to understand context, generate human-like text, and respond to prompts based on the input they're given. It is designed to simulate human-like conversation and can be used in a range of applications, from drafting emails to writing Python code and more. It analyzes the input it receives and then generates an appropriate response, all based on the vast amount of text data it was trained on.

Unit Tests: In software development and data processing, these are tests that validate the functionality of specific sections of code, such as functions or methods, in isolation. This also applies to the coding of data pipelines to verify the correctness of a specific section of code.

Contextual Window: The amount of text or data the AI model can consider at one time when generating responses or processing information, which impacts the model's ability to maintain coherence over longer texts.

Model Drift: the phenomenon where the performance of a machine learning model degrades over time due to changes in the underlying data or context, which can affect the reliability of AI tools.

Prompt Engineering: The process of crafting inputs (or "prompts") to generative AI models to achieve desired outputs, a skill essential for optimizing interactions with AI systems.

Reinforcement learning from human feedback (RLHF): This is a machine learning model where the automated decisions are based on a reward-based scoring system where the various decisions the model can take are given rewards or penalties. The human is in the loop in the training process, where the direct feedback alters the future actions of the model.

Retrieval Augmented Generation (RAG): A method that enhances generative AI models by allowing them to access and incorporate external information sources in real-time, broadening their knowledge base beyond initial training data.

Softmax Layer: A mathematical function used in machine learning models, particularly in classification tasks, to convert the raw output scores from the model into probabilities by normalizing the scores.

Hallucination: In AI, hallucination refers to instances where the model generates information that wasn't in the training data, makes unsupported assumptions, or provides outputs that don't align with reality. Or as Marc Andreessen noted on the Lex Fridman podcast, “Hallucinations is what we call it when we don’t like it, and creativity is what we call it when we do like it.”