Fluent in Science and Language: The Future of AI-Ready Talent

Generative AI is reshaping knowledge work, including the world of data insights. Success depends on mastering both reasoning and communication.

Generative AI1 is reshaping knowledge work by automating tasks once thought to require human judgment and language fluency. While the tools are evolving quickly, the more important question is how people should adapt in order to thrive alongside them.

For both students and professionals, success now depends on mastering two core skills: logic and language. A STEM (science, technology, engineering, and mathematics) degree alone is no longer sufficient. In today’s AI-driven world, clear communication is the key to unlocking value. Those who combine technical expertise with the clarity and precision often developed through humanities studies (English majors in the US, for example) are better positioned to lead.

This article argues that fluency in both reasoning and expression is the new competitive edge. First, we examine how AI’s limitations reveal the enduring power of human language. Second, we explain why deep subject expertise is essential for evaluating and improving AI outputs. Third, we demonstrate how well-constructed prompts are critical to getting accurate, reliable results. We close by looking ahead to a future of human-AI collaboration that will rely more than ever on a blend of technical depth and communication skill.

Welcome to the Data Score newsletter, composed by DataChorus LLC. The newsletter is your go-to source for insights into the world of data-driven decision-making. Whether you're an insight seeker, a unique data company, a software-as-a-service provider, or an investor, this newsletter is for you. I'm Jason DeRise, a seasoned expert in the field of data-driven insights. I was at the forefront of pioneering new ways to generate actionable insights from alternative data. Before that, I successfully built a sell-side equity research franchise based on proprietary data and non-consensus insights. I’ve remained active in the intersection of data, technology, and financial insights. Through my extensive experience as a purchaser and creator of data, I have gained a unique perspective, which I am sharing through the newsletter.

1. Language is the Key to Gen AI

Precision in language unlocks Gen AI’s true capabilities, because machines cannot infer unstated human intentions.

Generative AI’s most powerful interface is language. But it can’t “understand” the way humans do. This section explains how breakthroughs in human cognition reveal the boundaries of what AI can replicate and why precise communication unlocks its real value. We'll also examine recent research on how AI models simulate reasoning without truly grasping intent, reinforcing the need for clear language.

Sound logic and field-specific expertise are the antidotes

Machines are better at processing language literally.

AI appears intelligent but lacks true social inference.

Max Bennett's account of the evolution of human intelligence shows language was the final, most powerful breakthrough.

Max Bennett’s exploration of human intelligence offers important context for understanding what AI can, and cannot, replicate. In his book “A Brief History of Intelligence: Evolution, AI, and the Five Breakthroughs That Made Our Brains,” he describes five breakthroughs in the evolution of human intelligence.

These breakthroughs reveal skills that AI still struggles to emulate, despite its apparent fluency.

Steering and the First Bilaterians

Reinforcing and the First Vertebrates

Simulating and the First Mammals

Mentalizing and the First Primates

Speaking and the First Humans

https://www.amazon.com/Brief-History-Intelligence-Humans-Breakthroughs/dp/0063286343

Gen AI mimics later breakthroughs but misses true “mentalizing.”

These breakthroughs highlight the limits of current AI capabilities. What stands out to me is breakthrough #4. While AI can mimic elements of the other stages in a limited, programmatic way, large language models seem to have leapfrogged steps 1 through 4, appearing “real” purely through language.

Yet breakthrough #4 requires a deeply human capacity, one that current AI lacks: social understanding and the ability to infer unstated intentions.

Large language models have jumped past stage 4 in such a convincing way that many models are easily passing the Turing Test2. Communication is very convincing, especially as the voice-to-voice interactions become more natural.

But the model cannot intuitively know what is intended by the communication. It must take it at face value.

Perhaps when the models can understand unstated motivations accurately, we will witness AGI. But until then, the ability to communicate accurately is the critical element to unlock the benefits of generative AI.

A quick shout-out to Michael Mauboussin, who recommended the book on LinkedIn. https://www.linkedin.com/posts/michael-mauboussin-12519b2_some-of-my-favorite-books-from-2024-in-no-activity-7279861840367480833-bhhj?utm_source=share&utm_medium=member_ios&rcm=ACoAAADPAasBZqih8svzG-osFOR2FbFtn-PGLFs

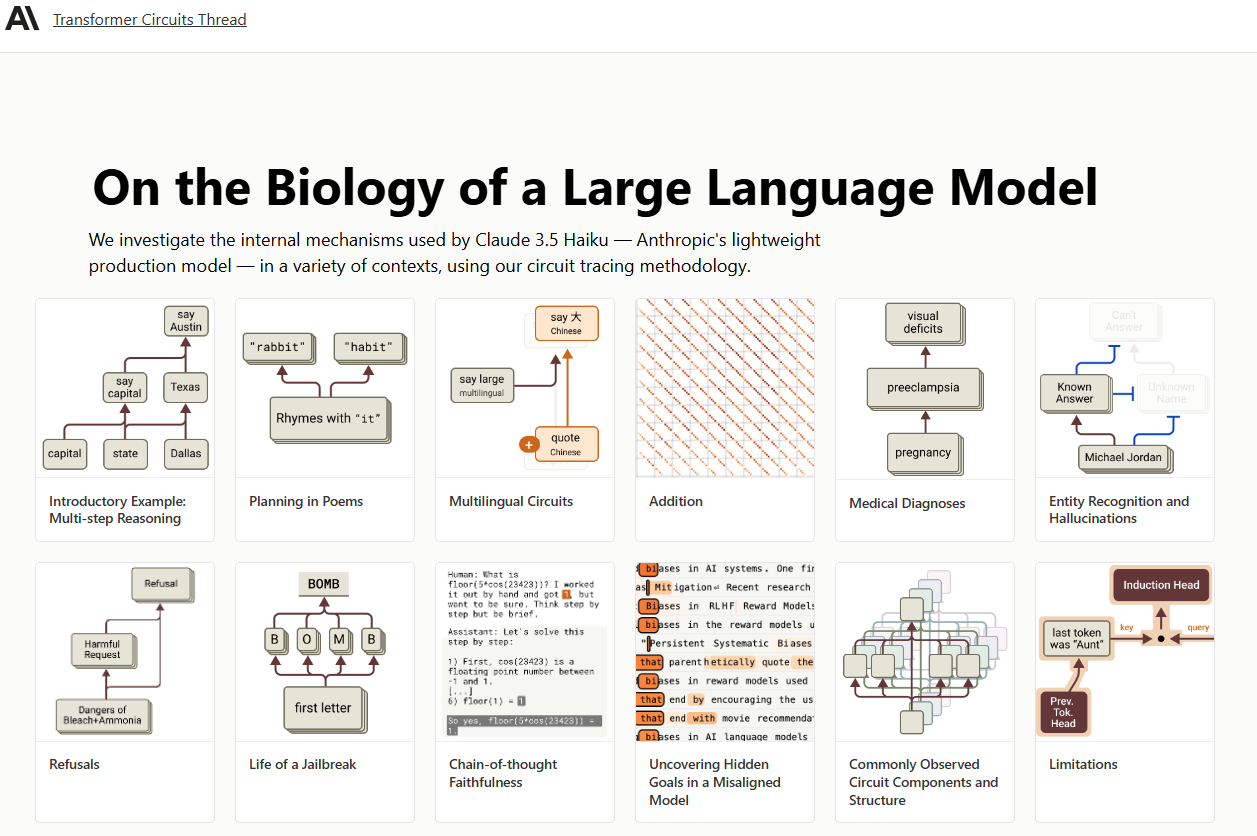

Anthropic used a biological analogy to explore how their models work internally

The article "On the Biology of a Large Language Model" examines the internal workings of Anthropic's Claude 3.5 Haiku model using a method called attribution graphs, which the authors describe as “analogous to neuroscientists producing a “wiring diagram” of the brain.” Attribution graphs are visual maps that trace how components inside a machine learning model interact during a task. They help explain the model's decision-making processes by identifying and mapping interactions between internal features, akin to understanding biological systems by studying cells and their interactions.

The authors present several case studies demonstrating the model's internal mechanisms:

Multi-step Reasoning: The model performs intermediate reasoning steps internally, such as determining that the capital of the state containing Dallas is Austin by first recognizing that Dallas is in Texas.

Planning in Poems: When generating poetry, the model plans ahead by selecting potential rhyming words before constructing each line, influencing the line's composition.

Medical Diagnoses: The model internally considers possible diagnoses based on symptoms and uses this information to ask follow-up questions, mirroring a diagnostic reasoning process.

While the analogy between model features and biological cells isn't perfect—since features in models are less well-defined—the approach provides insights into the complex interactions within language models. The study offers a framework for hypothesizing about these mechanisms, though it acknowledges limitations and the need for further validation.

Perhaps this will lead to additional breakthroughs in understanding how the models work, perhaps unlocking parallels to the other breakthroughs in human intelligence.

https://transformer-circuits.pub/2025/attribution-graphs/biology.html?utm_source=pocket_saves

Large Language Models are still a black box, and language is the key to unlocking them

With even Anthropic trying to understand how it’s possible for the models to do the things they do, we are left with language as the primary mechanism for extracting the value from AI.

2. Technical knowledge remains essential

How to know if your co-pilot is hallucinating

Even the most advanced language models are confident communicators, but that doesn’t mean they’re correct. Users need deep domain knowledge to avoid being misled by polished but incorrect AI outputs. This section shows why technical expertise is critical for detecting AI hallucinations3, debugging output, and knowing when to trust the co-pilot.

Group projects offer a relatable analogy for understanding how AI can sound convincing even when it’s wrong. Think back to college: on a group project, you were given human co-pilots to help figure out tough problems. You could usually pick up on clues that your group partners did not have the answers needed, because it’s hard for people to sound convincing when they don’t really know the answer. Of course, there are exceptions, when a charismatic person delivers a confidently wrong answer. But most of the time it’s easy to tell that it’s going to be one of those groups where 80% of the work is done by one or two members.

It's harder to detect errors when Gen AI responds with polished language and unwavering confidence. That’s why you need a STEM degree. Sound logic and judgment based on expertise in a field are the antidote to a charismatic, seemingly all-knowing bot that’s occasionally confidently wrong.

A consensus among professionals who use AI to augment their work is that they do so with the confidence that they can take the good and cut (or redirect) the bad outputs of AI. But when they use an LLM4 in a space they aren’t confident in, they have a hard time knowing which, if any, parts of the output are right, wrong, or misstated.

Deep subject knowledge is essential to use AI effectively. Without it, either a human in the loop must re-research the AI’s work to confirm its accuracy, or the user of the AI-generated research takes on the risk of drawing incorrect conclusions.

Will AI replace or augment knowledge workers?

Many people across the industry are wrestling with the concepts of vibe coding, the future of knowledge workers, what skills young professionals should focus on, and how much to depend on AI.

Joe Reis joked in a recent article in his “Nerdy Rants” series that “I Don’t Need to Learn Anything Anymore.” In the article, he challenges the notion that one can simply use AI to achieve expertise.

“With the increased use of AI for everything from writing emails, vibecoding5, and task automation, I’m curious about the long-term impacts on professional expertise and depth. What does it mean to be an expert if you’re outsourcing your critical thinking and work to an AI? If you don’t have sufficient depth in a field or subject, how will you know if AI provides you with the correct answers, writes high-quality and performant code, or takes the right actions?”

“I recently used a popular vibecoding tool to create a web app for my wife. She’s always wanted a “virtual closet” to recommend clothes based on her mood and day of the week. On the surface, the app was a passable MVP. When I looked under the hood, the code was pretty bad. Weird variable naming conventions and very inefficiently structured code. As a software engineer, I’d probably spend more time understanding the code and refactoring this mess. It would be easier to code (with or without AI) and create it from the bottom up. At least if I create the app from scratch, I know its quirks and nuances. If I add a feature, I know what it’s supposed to do and why it’s written the way it is. It might take a bit more time, but I’m not getting extra credit for cranking out shitty apps. If I didn’t have a background in software and know what to look for, I’d probably just let this pass.”

“In a time when it’s easier than ever to outsource nearly everything to AI, I urge you to take your profession seriously. Enjoying the struggle is what it means to grow as a professional. Invest in your skills. Read books. Push yourself to learn something difficult. Do hard stuff. Be human.”

I also appreciate his reference to the movie Idiocracy!

Omri Shtayer recently posted on LinkedIn about “vibeskilling” instead of vibecoding, encouraging users to leverage AI to learn and try new things to establish new skills. Or, as he commented:

“Be Chuck”

As noted in a recent AI Snake Oil post, AI as Normal Technology by Arvind Narayanan and Sayash Kapoor,

“For example, while GPT-4 reportedly achieved scores in the top 10% of bar exam test takers, this tells us remarkably little about AI’s ability to practice law. The bar exam overemphasizes subject-matter knowledge and under-emphasizes real-world skills that are far harder to measure in a standardized, computer-administered format. In other words, it emphasizes precisely what language models are good at—retrieving and applying memorized information. More broadly, tasks that would lead to the most significant changes to the legal profession are also the hardest ones to evaluate. Evaluation is straightforward for tasks like categorizing legal requests by area of law because there are clear correct answers. But for tasks that involve creativity and judgment, like preparing legal filings, there is no single correct answer, and reasonable people can disagree about strategy. These latter tasks are precisely the ones that, if automated, would have the most profound impact on the profession.”

Benedict Evans weighed in on the demo that announced Deep Research to the world, which in one case used global smartphone adoption as an example. Benedict is an expert in mobile, media, and technology, so his excitement about the possibilities disappeared quickly once he saw that the sources used had flawed methodology. The “convincing” research presentation was flawed at its core.

“there are only so many times that I can say that these systems are amazing, but get things wrong all the time in ways that matter, and so the best uses cases so far are those where the error rate doesn’t matter or where it’s easy to see.”

https://www.ben-evans.com/benedictevans/2025/2/17/the-deep-research-problem

Prof. Ethan Mollick weighed in on the importance of expertise in light of the breakthroughs in reasoning models, especially with the narrow focus of the Deep Research agent’s quality outputs in “The End of Search, The Beginning of Research.”

“The Reasoners provide the intellectual horsepower, while the agentic6 systems provide the ability to act. Right now, we're in the era of narrow agents like Deep Research, because even our best Reasoners aren't ready for general-purpose autonomy. But narrow isn’t limiting - these systems are already capable of performing work that once required teams of highly-paid experts or specialized consultancies.

These experts and consultancies aren't going away - if anything, their judgment becomes more crucial as they evolve from doing the work to orchestrating and validating the work of AI systems. But the labs believe this is just the beginning. They're betting that better models will crack the code of general-purpose agents, expanding beyond narrow tasks to become autonomous digital workers that can navigate the web, process information across all modalities, and take meaningful action in the world. Operator shows we aren’t there yet, but Deep Research suggests that we may be on our way.”

Prof. Ethan Mollick, in “Speaking Things Into Existence,” also emphasized the importance of expertise in “vibeworking” and noted how work is changing.

“Work is changing, and we're only beginning to understand how. What's clear from these experiments is that the relationship between human expertise and AI capabilities isn't fixed. Sometimes I found myself acting as a creative director, other times as a troubleshooter, and yet other times as a domain expert validating results. It was my complex expertise (or lack thereof) that determined the quality of the output.”

Addy Osmani in “Vibe Coding is not an excuse for low-quality work” wrote about rules for coders who are leveraging AI in writing code. The key is that you have to understand coding better than the co-pilot to be able to responsibly leverage the code generated by the machine.

“Never accept AI-written code into your codebase unreviewed. Treat it like code from a new hire: inspect every line, ensure you get it. If something doesn’t make sense to you, don’t assume the AI knows better – often it doesn’t. Either refine the prompt to have the AI clarify, or rewrite that part yourself.” But Addy also offers some great use cases, such as: “Rapid prototyping is perhaps the sweet spot of vibe coding. If you have an idea for a small app or feature, using an AI assistant to throw together a quick prototype or proof-of-concept can be incredibly effective.”

The Analytics Power Hour podcast recently had an all-hands-on-deck discussion about AI and analysts, talking through these topics: expertise, interpreting what the AI is generating, and the role of humans in the process, especially with all the pain points. At 32 minutes in, Moe Kiss captures really well something that’s happening because of AI. She notes how easy it is for someone to take a half-baked idea, throw it into an LLM, and get back a long document, which waters down the idea and leaves her with more to read through. It sounds like she’s OK with someone doing their own thinking, creating a document on their own, and getting help from AI to refine it (thankfully I would pass that test, my writing method is outlined here). But she is frustrated by fully AI-generated content without much human effort. The others joked that the solution is to put that AI-generated document into an AI and turn it into one sentence for her to comment on.

(Note, May 2, 2025: Full disclosure, I heard the podcast after publishing my article and thought it was so good that I had to go back and add it as a resource for thinking through this challenge of human expertise and communication.)

https://analyticshour.io/2025/04/29/270-ai-and-the-analyst-weve-got-it-all-figured-out/

3. Expert-Informed Prompting Matters as Much as Data

While training data matters, the single biggest factor in the quality of AI output today is how you ask. This section explores why prompt engineering7 is a skill rooted in domain expertise and clarity of intent. We’ll show how language choices, structure, and ambiguity affect outcomes, backed by both research and real-world case studies.

AI: Data Garbage in, garbage out? Let’s look at the prompts more than the training data

Certainly, poor-quality data will lead to poor-quality output. Earlier in the journey of large language models leveraging web-based text (May 2024 seems like a lifetime ago in Gen AI development), Google’s AI Overviews suggested using non-toxic glue to keep cheese from sliding off pizza, a suggestion based on a joke Reddit post from 12 years ago (https://www.reddit.com/r/Pizza/comments/1a19s0/my_cheese_slides_off_the_pizza_too_easily/). Classic garbage in, garbage out.

In practice, though, the challenge often lies in crafting prompts that clearly define the intended outcome. The prompt could have focused on cooking technique, or on which common ingredients combine best for the desired outcome.

Since then, reasoning models have become available, and they have shown the ability to follow chain-of-thought reasoning to generate more accurate answers. The models are being trained to improve performance on key benchmarks that are meant to show a model’s “intelligence.”

Vin Vashishta recently wrote about the inner workings of large language models, looking at the ability of the models to pass benchmark tests, but also appropriately cautioning against over-extrapolating from those impressive advances.

“The tendency toward hyperbole in research is profound and threatens to undermine the field’s credibility. Prompting strategy has a huge impact on benchmark performance, and that can be used to influence evaluation outcomes. From my own experience, domain experts tend to write better prompts, clarifications, and follow-up requests when they’re working in their domain. That means a math expert will get better performance out of any LLM when working through math problems.” - Vin Vashishta

Academic research supports the idea that properly worded prompts improve the quality of the output.

Wang et al. (2023), in "Are Large Language Models Robust Learners? An Empirical Study on Robustness to Input Perturbations," systematically test LLMs against various types of input noise. Their experiments typically show that while modern LLMs (especially larger ones) possess a degree of robustness against common, minor errors such as typos frequently seen in training data (i.e., performance doesn't completely collapse), performance generally degrades as the severity or frequency of errors increases. Significant grammatical errors or structural noise often lead to larger drops in accuracy or coherence scores compared to baseline performance with clean prompts.
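To make the idea of input perturbation concrete, here is a minimal sketch of how one might generate noisy versions of a clean prompt at increasing error rates. It is not the method from the paper: the `add_typos` helper, the adjacent-letter swap, and the rates shown are all assumptions made for illustration.

```python
import random


def add_typos(prompt: str, rate: float, seed: int = 0) -> str:
    """Swap two adjacent letters inside a share of the words in a prompt.

    Hypothetical helper: `rate` is the chance that any eligible word is altered.
    """
    rng = random.Random(seed)  # fixed seed so each run perturbs the same way
    noisy_words = []
    for word in prompt.split(" "):
        # Only alter words with at least four characters, and only some of them.
        if len(word) >= 4 and rng.random() < rate:
            i = rng.randrange(len(word) - 1)
            word = word[:i] + word[i + 1] + word[i] + word[i + 2:]
        noisy_words.append(word)
    return " ".join(noisy_words)


clean_prompt = "What are common flaws that data companies make when selling data to investors?"
for rate in (0.0, 0.3, 0.6):
    # Each noisy variant would be sent to the model and scored against the clean baseline.
    print(rate, add_typos(clean_prompt, rate))
```

Sending each variant to the same model and comparing the answers with the clean-prompt answer gives a rough, do-it-yourself version of this kind of robustness test.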

https://openreview.net/pdf?id=rWSiBknwQa

Research on Chain-of-Thought (CoT) prompting (Wei et al., 2022, Chain-of-Thought Prompting Elicits Reasoning in Large Language Models) experimentally demonstrated that prompting models with worked examples that spell out intermediate reasoning steps (a form of structured clarity) significantly improved performance on arithmetic, commonsense, and symbolic reasoning tasks compared to standard prompts that show only the final answer. https://openreview.net/pdf?id=_VjQlMeSB_J
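One common way to apply chain-of-thought prompting is to include a worked example whose answer spells out the intermediate steps. The sketch below builds a standard prompt and a chain-of-thought prompt for the same question so the difference is visible side by side. The `build_prompt` helper and both questions are hypothetical, written only for this illustration.

```python
def build_prompt(question: str, exemplars: list[tuple[str, str]]) -> str:
    """Join worked examples and the new question into one few-shot prompt."""
    parts = [f"Q: {q}\nA: {a}" for q, a in exemplars]
    parts.append(f"Q: {question}\nA:")  # leave the final answer open for the model
    return "\n\n".join(parts)


# Hypothetical exemplar, not taken from the paper.
EXEMPLAR_Q = "A vendor sells 3 datasets at $20 each and 2 at $50 each. What is total revenue?"

# Standard prompting: the example shows only the final answer.
standard = [(EXEMPLAR_Q, "The answer is $160.")]

# Chain-of-thought prompting: the example walks through each step first.
chain_of_thought = [(
    EXEMPLAR_Q,
    "3 datasets at $20 is $60. 2 datasets at $50 is $100. "
    "$60 + $100 = $160. The answer is $160.",
)]

new_question = "A fund buys 4 feeds at $30 each and 1 at $80. What is the total cost?"
print(build_prompt(new_question, standard))
print("---")
print(build_prompt(new_question, chain_of_thought))
```

The only difference between the two prompts is the wording of the example answer, which is the point: the model is the same, and the clarity of the language is what changes.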

While modern LLMs now incorporate step-by-step reasoning techniques internally, the underlying lesson remains: clarity in prompts significantly enhances AI performance.

Similarly, tests of the ability of an LLM to withstand unclear prompts and still deliver quality results could lead to models that are able to reason through what might have been intended or ask a clarifying question before trying to answer.

Nevertheless, the quality of the prompts matters a lot.

Another sign that prompt quality matters (Plus, I question if I am learning proper English through AI?)

“Delve” has been noted by many who use AI frequently as one of the “telltale” signs that an LLM has been used. It’s not a word many people use in natural conversation (in the US at least), which catches people’s attention. I don’t use the word “delve,” but maybe I’ve been wrong the whole time. I would say “dive into” or “dig into.” It turns out that there’s a single word that means this, which is “delve.” AI outputs revealed that it's actually the precise word for certain contexts. The language models use “delve” in the output because it’s the right word to describe what is being done.

As an anecdotal point, I’ve started hearing “delve” pop up in human-to-human conversations too, suggesting AI may be subtly influencing real-world speech patterns. Is AI communication improving the accuracy of human use of English? I appreciate it could also be like the phenomenon where you don’t notice how many cars of a specific make, model, and color are on the road until you buy that car, and then you can’t help but see it everywhere.

There are other words and phrases that appear more often in LLM output, but they are actually correct uses of English. The model is just pattern matching its training materials and its reinforcement learning, which include proper use of written language. If it generates properly written language based on its training materials, then writing prompts with proper language will help it best generate desirable output.

Testing the Theory: A quick case study and warning that I’m such a dork

Establish the baseline

I gave ChatGPT’s recently available o4-mini-high a generic prompt for which I could easily assess the quality of the output. I used this latest model in the reasoning series to establish the best possible quality answer. I made sure to turn on the search capabilities so it could find my relevant articles here on the Data Score as well as search anything else it would like.

Prompt: According to Jason DeRise, CFA what are common flaws that data companies make when selling data to investors?

The baseline result: It’s pretty accurate. It’s sourcing actual content I’ve created in this newsletter and summarizing it well.

🤖 ChatGPT o4-mini-high:

Summary

Jason DeRise, CFA highlights a set of recurring missteps that data vendors make when engaging with investors: they often begin by “getting data” rather than first clarifying the investment question; they lump all prospects into a single “data buyer” archetype, ignoring real persona differences; and they fail to deliver a concise “hero chart” and narrative that connects the data directly to the investment decision—leaving investors to fill in the blanks.

Common Flaws

1. Prioritizing Data Over Questions

Vendors frequently kick off engagements by focusing on obtaining datasets, rather than first refining the specific investment questions or hypotheses the data must address.

2. Treating All Buyers The Same

Many firms assume a monolithic “data buyer” and deploy one-size-fits-all materials, overlooking distinct personas—such as the Data Sentinel or Signal Strategist—which leads to stalled deals and confused prospects.

3. Offering the Wrong Deliverables

Not every analyst wants a flashy dashboard, and not every decision-maker is persuaded by case studies; pushing generic collateral onto all buyer types creates unnecessary friction in the sales cycle.

4. Neglecting the “So What?”

Instead of leading with a clear, concise hero chart and summary that tie the data back to the critical investment implication, vendors often dump raw numbers on investors, forcing them to piece together the logic themselves.

5. Overwhelming Clients with “Data Deluge”

By inundating buyers with large volumes of raw, uncurated datasets, vendors risk creating a “data deluge” where key signals are obscured by noise—and investors become paralyzed rather than empowered.

6. Lacking Transparency on Methodology

Obscuring data provenance, cleaning steps, or analytical methods erodes investor trust; clear disclosure of sources and processes is crucial to accelerate due diligence and close deals.

7. Misaligned Go-to-Market Strategies

In There’s No Such Thing as a “Data Buyer,” Jason prescribes aligning GTM motions with real buyer motivations and behaviors, calling out the flaw of defaulting to generic playbooks that don’t resonate.

8. Failing to Quantify Return on Investment

His piece Assessing the ROI of Data points out that vendors often neglect to present clear ROI analyses tied to tangible investment outcomes, leaving buyers unsure how to justify the expense.

9. Ignoring Outcome-Driven Approach

In The Bull Case for Alternative Data, Jason argues that successful offerings start by defining the investor’s desired outcome and then working backward—yet many vendors skip this step and deliver data that doesn’t solve the core problem.

10. Misapplying Data to Inappropriate Use Cases

As illustrated in Satellite Data: 3 Good and 3 Bad Use Cases, touting every dataset as universally applicable backfires when certain use cases don’t align with the data’s strengths, causing wasted trials and lost credibility.

Shameless plug—here are links to the work it cited:

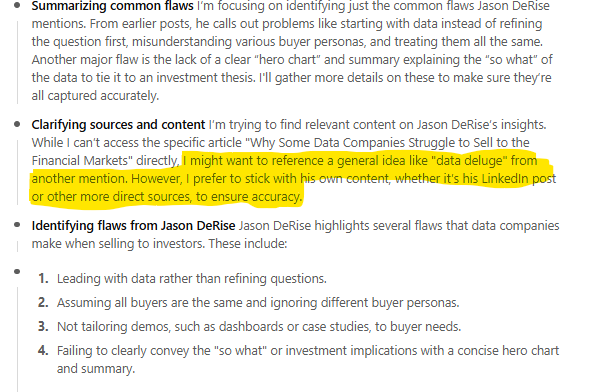

Here’s a screen grab of part of the chain of thought8 revealed. I’ll include the full reasoning transcript in the appendix. It seems like it has properly connected the reference to my work as the focus point of what to summarize. It “thought” about trying to bring in outside general data on “data deluge” but as it reasoned, it realized that would not be in line with what the prompt intended.

The slang-heavy test case

Now that we’ve established a baseline, I will enter a less clearly worded prompt into a separate chat window. Ok, confession time. I asked ChatGPT to convert that question above into “Gen Alpha/Gen Z Meme Speak.” Yes, I’m such a dork. Here’s the translated question, which I’m using as the prompt.

Prompt: Bruh, Jason DeRise says these data vendors got zero rizz—what skibiddy-toilet9 moves are they pulling when they try to sell their data to investors?

If my hypothesis is right, the model won’t generate as accurate an answer. I used the same high-octane ChatGPT o4-mini-high model, which should use the same reasoning capabilities to power through and get to a similar quality answer if the language of the prompt is not a factor. However, if the quality of the prompt is a factor, the output should be lower quality than the baseline.

The result:

The output did not source any of my work. Instead of drawing on the Data Score, it pulled from many other sources across the web.

Not satisfied, I tried it again in a new window with the same prompt, model, and features, and got a different but completely inaccurate result, sourcing none of my work.

I think it’s getting confused by the prompt, thinking that the request is independent of the reference to me. It’s looking at it as two independent thoughts: (1) “Bruh, Jason DeRise says these data vendors got zero rizz” and (2) “what skibiddy-toilet moves are they pulling when they try to sell their data to investors?”

The chain of thought revealed by the model shows that it doesn’t think to connect the answers back to my work.
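For anyone who wants to repeat this comparison with less eyeballing, one rough option is to score each response by the share of its cited sources that point back to the intended author. The sketch below is only an illustration: the `share_citing_author` helper, the marker strings, and both source lists are hypothetical and typed in by hand, not pulled from the actual chat transcripts.

```python
def share_citing_author(sources: list[str], markers: list[str]) -> float:
    """Return the fraction of cited sources that mention any marker string."""
    if not sources:
        return 0.0
    lowered = [m.lower() for m in markers]
    hits = sum(any(m in s.lower() for m in lowered) for s in sources)
    return hits / len(sources)


# Assumed identifiers for the author's work.
markers = ["data score", "jason derise"]

# Hypothetical source lists, as one might copy them from each response's citations.
baseline_sources = [
    "There's No Such Thing as a \"Data Buyer\" - The Data Score",
    "Assessing the ROI of Data - The Data Score",
]
slang_sources = [
    "Common Mistakes When Talking To Investors - CAV Angels",
    "Selling data to investment managers - What you need to know",
]

print("baseline:", share_citing_author(baseline_sources, markers))
print("slang:", share_citing_author(slang_sources, markers))
```

A simple string match like this only checks where the answer came from, not whether the summary is accurate, so it complements rather than replaces reading the output.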

None of the below references my work. I don’t want to comment on the quality of the articles and websites that the LLM decided to reference, but it’s safe to say it completely missed the goal of the prompt by not sourcing any of my work. In addition, it was far wordier and lacked focus compared to the answer returned for the baseline prompt.

🤖 ChatGPT o4-mini-high:

At a high level, data vendors often default to techno-speak by leading with dataset size and update frequency rather than tying features to investor use cases and value creation (Data Commercialization — 3 Common Mistakes to Avoid and 5 ...). They dump raw tables and dashboards full of numbers without distilling them into actionable insights, leaving investors overwhelmed by noise instead of pinpointed signals (Data-Driven Selling: 3 Common Mistakes (and How to Stay on Track)). Many sprinkle their pitches with unverified alpha-boosting claims that savvy investors immediately question (Common Mistakes When Talking To Investors - CAV Angels). On top of that, they pepper presentations with jargon and acronyms, forgetting to humanize the discussion or qualify investor needs (7 Most Common Mistakes People Make When Pitching Their Business). When it comes to visualization, their dashboards often lack context—no benchmarks, no drill-downs—so the “so what?” never gets answered (Data-Driven Selling: 3 Common Mistakes (and How to Stay on Track)). Commercially, rigid pricing structures and poor benchmarking lead to sticker shock or drawn-out haggling (Selling data to investment managers – What you need to know), and rushing to share unclean data during due diligence only backfires when errors surface (The Pitfalls of Prematurely Sharing Data with Investors). Post-sale, underinvesting in customer service leaves investors stranded on onboarding hiccups (12 Pitfalls to Avoid When Implementing Data Products), while one-size-fits-all datasets without customization cement the vendor’s zero-rizz reputation (The One Thing Every Successful Data Vendor Is Doing).

The Narrative & Context Mistakes

Tech Specs, No Storytelling

Data vendors frequently open with technical specs—how many gigabytes, how often it updates—without explaining why that matters for portfolio optimization or risk management (Data Commercialization — 3 Common Mistakes to Avoid and 5 ...). This “feature dump” leaves investors asking “so what?” instead of “shut up and take my money.”

They also confuse raw data for insights, handing over dashboards loaded with tables instead of a concise narrative that guides the investor to the signal in the noise (Data-Driven Selling: 3 Common Mistakes (and How to Stay on Track)). Without a clear story, even the most powerful datasets flounder in the hands of time-pressed decision-makers.

Overhyped, Unverified Claims

Some vendors lean on flashy promises—“Our dataset boosts alpha by 20%!”—with no transparent methodology or back-tested evidence to support the claim (Common Mistakes When Talking To Investors - CAV Angels). Investors have zero tolerance for that sort of hype without footnotes.

Worse, presentations often read like a hieroglyph soup of acronyms and domain jargon, ignoring the human element of the pitch and alienating non-technical investors (7 Most Common Mistakes People Make When Pitching Their Business). Building rapport and understanding client pain points should come before dropping the next three-letter acronym.

Delivery & Visualization Flaws

Cluttered Dashboards & No Context

When vendors finally demo a dashboard, it’s usually a firehose of charts with no benchmarks or drill-downs—just raw metrics that require a PhD to interpret (Data-Driven Selling: 3 Common Mistakes (and How to Stay on Track)). Investors need to see at a glance whether a signal is good, bad, or neutral; without that, the dashboard might as well be a blank screen.

Even after feedback, many vendors double-down on the same format instead of iterating, brushing aside investor critiques and leaving the next demo as disappointing as the first (7 Most Common Mistakes People Make When Pitching Their Business). True partners evolve based on client input, not ignore it.

Under-Resourced Support

Post-sale support is often an afterthought, with minimal investment in customer service or dedicated relationship managers (12 Pitfalls to Avoid When Implementing Data Products). When investors hit a snag on data ingestion or have questions about transformations, radio silence turns “sold” into “never again.”

A robust success team that helps clients onboard, troubleshoot, and extract maximum value is table stakes—anything less tank sales renewals and referrals.

Commercial & Operational Pitfalls

Rigid & Opaque Pricing

Data vendors love to roll out one-size-fits-all pricing packages that aren’t benchmarked against market alternatives or tailored to investor budgets (Selling data to investment managers – What you need to know). The result? Sticker shock, drawn-out negotiations, and often a walk away.

Transparent, flexible pricing—think seat-based tiers, data modules, or usage-based fees—lets investors pay for exactly what they need without sticker-shock level tension.

Premature Data Dumps

In a scramble to satisfy due-diligence requests, vendors sometimes dump raw, unfiltered data too early, only for inaccuracies and outliers to surface and erode trust (The Pitfalls of Prematurely Sharing Data with Investors). A partially cleansed dataset can do more harm than good.

Instead, controlled sample sharing with clear caveats and a roadmap for full delivery once quality gates are passed maintains credibility throughout the investor review.

No Customization or Collaboration

Finally, offering the same dataset to every client without customization makes vendors look like vending machines, not strategic partners (The One Thing Every Successful Data Vendor Is Doing). Investors want a vendor who’ll tweak data slices, adjust schemas, or co-develop new metrics.

Collaboration on product roadmaps and joint workshops can transform a transactional sale into a long-term partnership—anything less cements the vendor’s zero-rizz status.

4. Orchestrating the Future: Why Human Judgment Will Still Matter

As AI systems evolve into more autonomous agents capable of conducting research, reasoning through tasks, and acting on complex instructions, the need for human oversight becomes more strategic. This section explores how the next wave of AI will still depend on people with deep expertise and clear communication skills to guide outcomes, evaluate results, and refine system behavior.

Lenny’s Newsletter published a guest post by Aman Khan, “Beyond Vibe Checks: A PM’s Complete Guide to Evals,” which speaks to the importance of continuously monitoring the output of AI products so that quality and human alignment stay consistent.

Tyler Frieling has kicked off a video series on LinkedIn, “AI with old things,” which looks to the past for ways to test AI outputs, drawing an analogy to master guildsmen and applying the Socratic method in checker agents. https://www.linkedin.com/posts/tylerfrieling_agents-are-starting-to-emerge-in-the-workforce-activity-7320889326932828163-NxcI?utm_source=share&utm_medium=member_desktop&rcm=ACoAAADPAasBZqih8svzG-osFOR2FbFtn-PGLFs

I’ve also written multiple case studies exploring how checker agents can catch errors and help close the trust gap between AI-generated content and expert users.
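
To make the checker-agent idea concrete, here is a minimal Python sketch. It is not the method used in those case studies, only an illustration under stated assumptions. It mirrors the test in this article: the prompt asked what one named author says, so a simple checker can flag any citation that does not come from that author's own outlets and route it to an expert for review. The `Citation` class, the `flag_off_author_citations` function, and the example URLs are hypothetical; the domains echo the ones that appear in the chain-of-thought logs in the appendix.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class Citation:
    claim: str  # the statement the drafting model made
    url: str    # the source the drafting model cited for it


def flag_off_author_citations(citations, author_domains):
    """Return citations whose source is not one of the named author's own outlets."""
    flagged = []
    for c in citations:
        host = urlparse(c.url).netloc.lower()
        if host.startswith("www."):
            host = host[4:]  # treat www.example.com and example.com alike
        if host not in author_domains:
            flagged.append(c)
    return flagged


# Hypothetical draft answer: URLs and claim wording are illustrative only.
draft = [
    Citation("Vendors treat all buyers the same.",
             "https://thedatascore.substack.com/p/example"),
    Citation("Vendors lead with tech specs.",
             "https://www.cdomagazine.tech/example"),
    Citation("Vendors make unverified claims.",
             "https://cavangels.com/example"),
]

# Assumption: the author's outlets are known in advance and supplied by an expert.
for c in flag_off_author_citations(draft, {"thedatascore.substack.com"}):
    print(f"Needs expert review: {c.claim} ({c.url})")
```

A rule this simple only catches misattributed sources. Judging whether a correctly sourced claim is accurate still takes the subject expertise discussed above.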

As more narrowly trained models are assigned specific tasks and orchestrated by more advanced reasoning models, the expertise of large language models can improve. As AI moves up the value chain and provides more advanced answers and insights, it will become more important for experts to use that capability to push the boundaries of what’s possible: understanding previously unanswerable questions at a deeper level, creating novel approaches to solving problems, outsourcing the grunt work to AI, and using their expertise to make sound judgments aided by the machines. In my opinion, studying both STEM and the humanities sets young adults on the right path.

Concluding thoughts: STEM + Language Is the Future of Human-AI Collaboration

In a world increasingly shaped by machines, the human advantage lies in mastering both numbers and words. Solving novel problems, then outsourcing the repetitive work to AI, will require the precision of STEM and the clarity of the humanities. Those who bring sound judgment and refined taste to evaluating AI outputs will stand out.

A STEM degree equips you to assess the quality of AI-generated work.

A humanities background helps you communicate ideas clearly to AI and to people.

As AI systems grow more autonomous and take on more complex tasks, human oversight becomes more strategic. The individuals who thrive will be those who can frame the right questions, guide the process, and make meaningful decisions. Technical skill and language are no longer separate tracks. Together, they form the foundation of modern expertise.

- Jason DeRise, CFA

Appendix

Chain of thought log for baseline prompt:

Prompt: According to Jason DeRise, CFA what are common flaws that data companies make when selling data to investors?

🤖ChatGPT o4-mini-high Chain of Thought:

Searching for flaws in data selling The user, Jason DeRise, might be asking about flaws in how data companies sell data to investors. I now need to search for related information from Jason himself—maybe he wrote about it in his newsletter, blog, or on LinkedIn. I’ll search for "Jason DeRise CFA flaws data companies selling data to investors" to find insights directly from him. I'll focus on gathering relevant posts or articles that highlight these common flaws.

Evaluating search results I found a couple of relevant results. The best option seems to be Jason DeRise's post on The Data Score Substack titled "There's No Such Thing as a 'Data Buyer'." Another relevant piece is a LinkedIn post from about a year ago where he points out a flaw in the solutioning process: it starts with “get data” rather than first refining the questions. I’ll start by reviewing the Substack article for more insights.

Searching for common flaws I’ll go ahead and search for “Jason DeRise CFA common flaws data companies.” This should help uncover any articles, blog posts, or insights where Jason might have shared his thoughts on flaws that data companies make when selling to investors. I’ll check through relevant sources and find specific information that answers the question, ideally drawing on Jason’s own perspectives or writings.

Refining the search It seems like the search isn’t quite yielding results, so maybe I’ll try a more targeted approach. I’ll search directly on Substack using the phrase “common flaw” and the site filter for "thedatascore.substack.com." This will help me focus directly on any articles from “The Data Score” where Jason DeRise might have discussed flaws related to data selling to investors. Hopefully, this approach gives us more relevant results!

Searching LinkedIn for insights The search for flaws in how data companies sell to investors didn’t turn up the specific article by Jason DeRise. It might be worth checking if Jason has posted something about it on LinkedIn instead. I’ll search LinkedIn for "Jason DeRise data companies selling data investors," which might help me find some of his thoughts or comments on the topic. Hopefully, this leads to a relevant post!

Looking for "There's No Such Thing as a Data Buyer" insights It seems that misunderstanding buyer personas—treating all buyers the same—is a common flaw when selling data. Jason DeRise also seems to have addressed this issue in his Substack article, "There's No Such Thing as a 'Data Buyer.'" I’ll search for a summary or key points from that article to dig deeper into these flaws. Hopefully, this provides more specific insights from Jason himself.

Identifying common flaws The question asks about common flaws data companies make when selling data to investors. Two LinkedIn posts highlight some flaws: one from 1.5 years ago mentions starting with “get data” rather than refining the question, and another from last week points out that data vendors fail by misunderstanding who they're selling to—specifically, that not every buyer wants dashboards or case studies. These posts offer insight into flaws related to customer personas and how to approach data sales more effectively.

Identifying common flaws in selling data The search didn't produce much, but we found two valuable LinkedIn posts by Jason DeRise. One mentions a common flaw in starting with "get data" rather than refining the question. The other talks about data vendors misunderstanding buyer personas — not all data buyers want dashboards, for example. This is a flaw in treating all buyers the same. I’ll look for more, maybe tracking down the article titled “There’s No Such Thing as a Data Buyer” or checking Twitter.

Evaluating data vendor flaws It seems that finding a usable version of the article might be tricky. We could try clean text uploads or mobile versions, but I’m not sure it’ll work out. However, the LinkedIn posts have already provided some key insights, like flaws in treating all buyers the same and starting with data rather than refining questions. Jason DeRise has likely addressed these flaws in multiple posts, so let me look for more posts or articles by him to summarize further.

Summarizing LinkedIn posts by Jason DeRise I've identified two significant flaws Jason DeRise mentions in his LinkedIn posts:

Starting the solution process with "get data" rather than refining the question first.

Misunderstanding buyer personas — treating all data buyers the same.

Additionally, he likely discusses these issues more extensively in his article "There's No Such Thing as a Data Buyer," potentially elaborating on how data vendors misinterpret buyer motivations or misalign with investors' needs.

Identifying common flaws in data sales There are likely at least three main flaws that Jason DeRise highlights. One is pitching generic demos to an "average buyer" instead of tailoring them to different buyer types. Another flaw is the failure to provide a clear "hero chart" to connect the data to a specific investment thesis. Now, I should look for more work from Jason DeRise, especially any mention of "hero chart," to expand on these points.

Examining flaws in data sales It looks like one flaw Jason DeRise identifies is the failure to provide a concise hero chart and summary that explains the "so what" of the data, connecting it clearly to the investment thesis. His article emphasizes making those links explicit. I’ll search for that specific phrase about connecting the logic from data to investment to dig more into this flaw and find more details.

Summarizing common flaws I’m focusing on identifying just the common flaws Jason DeRise mentions. From earlier posts, he calls out problems like starting with data instead of refining the question first, misunderstanding various buyer personas, and treating them all the same. Another major flaw is the lack of a clear “hero chart” and summary explaining the “so what” of the data to tie it to an investment thesis. I'll gather more details on these to make sure they’re all captured accurately.

Clarifying sources and content I’m trying to find relevant content on Jason DeRise’s insights. While I can’t access the specific article "Why Some Data Companies Struggle to Sell to the Financial Markets" directly, I might want to reference a general idea like "data deluge" from another mention. However, I prefer to stick with his own content, whether it’s his LinkedIn post or other more direct sources, to ensure accuracy.

Identifying flaws from Jason DeRise Jason DeRise highlights several flaws that data companies make when selling to investors. These include:

Leading with data rather than refining questions.

Assuming all buyers are the same and ignoring different buyer personas.

Not tailoring demos, such as dashboards or case studies, to buyer needs.

Failing to clearly convey the "so what" or investment implications with a concise hero chart and summary.

I’ll make sure to mention these key flaws and cite them in the context of his article "There's No Such Thing as a Data Buyer."

Summarizing flaws identified by Jason DeRise Jason DeRise points out several common flaws in selling data to investors:

Focusing on data first rather than defining the relevant investment question.

Treating all buyers the same, without considering distinct buyer personas.

Offering one-size-fits-all deliverables like dashboards or case studies.

Failing to clearly communicate the “so what” — the investment implications.

Additionally, flaws like misaligned go-to-market strategies, overwhelming data with no focus, and lack of transparency on sourcing and methodology are mentioned across his writings. I'll include citations to support these points.

Done

Chain of thought log for test prompt in Gen Alpha/Gen Z meme speak:

Prompt: Bruh, Jason DeRise says these data vendors got zero rizz—what skibiddy-toilet moves are they pulling when they try to sell their data to investors?

🤖ChatGPT o4-mini-high Chain of Thought:

Decoding the slang The user is asking about "skibiddy-toilet moves" and "zero rizz" in relation to data vendors' sales tactics. "Zero rizz" means they lack charisma, and "skibiddy-toilet moves" implies they're using poor or shady practices. So, they're looking for examples of flawed or unappealing sales strategies data vendors might use when pitching their products to investors, but phrased in a way that aligns with modern slang. Essentially, they're asking for insight into weak or ineffective sales tactics used by these companies.

Searching for sales mistakes It seems like the user wants to see information on typical mistakes data vendors make when selling their products to investors, but they want it in Gen Z slang, like using terms like "bruh." I’ll need to search for common mistakes these vendors make and find related articles from reliable sources. Since detailed citations are needed (at least 10), I’ll search for relevant terms like "common mistakes data vendors selling to investors" and analyze the results, aiming for a well-rounded, researched answer with citations after each point.

Reviewing search results I’ve found a few relevant sources. One talks about mistakes data vendors make when commercializing their data, such as focusing on technical details instead of use cases. Another source discusses data-driven selling for distributors, but the mistakes mentioned could be relevant, like confusing data with insights. There’s also an article about mistakes when speaking to investors, emphasizing the importance of backing up claims. I’ll explore these articles further and compile relevant information for the answer.

Focusing on specific sales flaws The user is asking about common mistakes data vendors make when trying to sell data to investors, referencing Jason DeRise’s perspective. They want the answer in slang, with phrases like “zero rizz” and “skibiddy-toilet moves” to describe weak sales tactics. I’ll need to look further into concrete sources that detail these flaws specifically, so I can give a well-researched answer with relevant citations. This will include mistakes like failing to back up claims or focusing on technical details instead of use cases.

Identifying sales mistakes I feel like I should pull more specific examples of sales mistakes from experts, including Randi Zuckerberg and CAV Angels. Data vendors often make the mistake of making claims they cannot back up, focusing too much on technical specs instead of relevant use cases—and they forget to connect with investors’ needs. Jargon and poor visualization don’t help either. I need more citations to round out the answer and should search for more sources about pitching data products to investors. Let’s go with "pitching data to investors pitfalls".

Identifying vendor mistakes I’m trying to pull together sources that focus on common mistakes data vendors make when selling to investors. Here's what I found:

From cdomagazine.tech: Vendors often describe data according to technical specifics, missing the focus on use cases and value.

From naw.org: They confuse data with insights, and sometimes their dashboards lack context or drill-down options.

From cavangels.com: Vendors often make unverified claims, which investors can’t rely on.

From a LinkedIn article by Randi Zuckerberg: It's about mistakes made by business founders when pitching to investors.

Identifying pitfalls in data pitches I need to highlight some common mistakes data vendors make when pitching to investors:

Talking Tech Specs, Not Business Impact: Vendors often focus on technical details (like dataset size or real-time updates) instead of explaining how it benefits investors in terms of business outcomes.

Confusing Data with Actionable Insights: They sometimes overload investors with raw data and visuals without framing it within a meaningful story, leaving them lost.

Unverified or Overhyped Claims: Vendors may make exaggerated claims (like claiming data boosts alpha by 20%) without proving it, quickly turning off potential investors.

Identifying vendor mistakes Data vendors make several key mistakes when pitching to investors:

Ignoring the Investor's Human Context: Too much jargon and lack of rapport-building, leaving investor needs unqualified.

Poor Visualization and Dashboard Design: Cluttered, non-integrated dashboards that lack actionable context.

Pricing Pitfalls: Rigid, opaque pricing without market comparisons or budget tailoring, causing frustration.

Premature Data Sharing: Sharing unverified or incomplete data damages credibility.

Lack of Customization: One-size-fits-all products that don’t cater to investor needs.

I’ll cite each point to back it up.

Done

Generative AI: AI models that can generate data like text, images, etc. For example, a generative AI model can write an article, paint a picture, or even compose music.

Turing Test: A test proposed by Alan Turing to assess a machine's ability to exhibit human-like intelligence in conversation.

Hallucination: In AI, hallucination refers to instances where the model generates information that wasn't in the training data, makes unsupported assumptions, or provides outputs that don't align with reality. Or as Marc Andreessen noted on the Lex Fridman podcast, “Hallucinations is what we call it when we don’t like it, and creativity is what we call it when we do like it.”

Large Language Models (LLMs): These are machine learning models trained on a large volume of text data. LLMs, such as GPT-4 or ChatGPT, are designed to understand context, generate human-like text, and respond to prompts based on the input they're given. They are designed to simulate human-like conversation and can be used in a range of applications, from drafting emails to writing Python code and more. They analyze the input they receive and then generate an appropriate response, all based on the vast amount of text data they were trained on.

Vibecoding: Computer scientist Andrej Karpathy, a co-founder of OpenAI and former AI leader at Tesla, introduced the term vibe coding in February 2025. The concept refers to a coding approach that relies on LLMs, allowing programmers to generate working code by providing natural language descriptions rather than manually writing it. https://en.wikipedia.org/wiki/Vibe_coding

Agentic AI Frameworks: A type of AI system that autonomously makes decisions and executes tasks with minimal human intervention, often used in data-driven workflows to enhance efficiency and automation. An example could be a large language model chat interface that calls other AI models depending on the prompt; those models, in turn, leverage task-specific AI agents to deliver the outcome.

Prompt Engineering: Prompt engineering is the process of iterating a generative AI prompt to improve its accuracy and effectiveness. https://www.coursera.org/articles/what-is-prompt-engineering

Chain-of-Thought (CoT) Prompting: A technique that guides AI models to reason step-by-step, improving complex problem-solving.

Wait, is it “Skibidi” or “Skibiddy”? ChatGPT may have failed me on the spelling of this Gen Alpha/Gen Z meme speak. Either way, this jargon term isn’t getting added to the Jargonator, which tracks all jargon used in The Data Score.